So by that logic, we should simply give up on propping up ROCm because, being so new, it doesn't deserve the chance to grow?

Who is "we"? The programmers? Yeah, the ones that aren't AMD funded already gave up on AMD. It's why Nvidia CUDA is the standard, the default. You can choose not to give up on AMD, I can review AMD cards, but the boots on the ground people that want the most bang for the buck for AI? They're generally going to go with Nvidia. I'm not saying that's good or bad, it's just the way things work. You don't gain market share by being worse / less powerful than the leader.

Lastly, in case you haven't noticed, blindly pushing for Nvidia has us in the current mess of way overpriced GPUs, plus a very dangerous precedent in pushing proprietary lock-in tech like DLSS that is killing the openness of the PC gaming platform.

I'm not blindly pushing for Nvidia, or AMD, or Intel. I'm laying out the current market situation and technology situation. Like it or hate it, Nvidia GPUs have more features and capability than AMD, at basically every price point, unless the only thing you want is rasterization performance.

I've been around long enough to have personally experienced the transitions from software rendering to early 3D accelerators/decelerators to transform and lighting, bump mapping, shadow mapping, tessellation, pixel and vertex shaders, and now we're into RT and AI tech. Every one of those had naysayers complaining that it wasn't necessary and didn't really help and we should just do more of what we already had.

Frankly, while RT can do some cool stuff, the real potential is with RT and AI-assisted rendering techniques. I said as much when Nvidia first revealed the RTX 20-series, that the tensor cores had the potential to affect things far more than the RT hardware. It's taking time, just like it always does, but AI isn't just a meaningless buzzword these days.

Why don't you ask Nvidia why they don't dare offer DLSS the same way that AMD is offering FSR, you know, to as many GPUs as possible?

Why don't you create an algorithm that leverages hardware that the competition doesn't have, to do things the competition can't do, and then spend a ton of time and effort on training and refining that algorithm, and then figure out how to make it run on devices that don't have the capacity to run it?

As I noted in the article, a universal AI-based upscaling algorithm sounds like a nice idea. Just like a lot of political ideas sound nice in theory. In practice, it's probably never going to happen. We may as well ask why ChatGPT didn't just give away all of its trained models and software for free. (And yes, there are things that are like ChatGPT that are free, like a bunch of Hugging Face models, but in my experience none of that stuff is anywhere near ChatGPT in practice.)

The irony is that, without Nvidia forging ahead, there are a bunch of things that are now becoming commonplace that wouldn't exist. G-Sync came first and paved the way, FreeSync followed. DLSS came first, FSR and FSR 2 followed. Reflex preceded Anti-Lag. DLSS 3 Frame Gen came out a year in advance of publicly available FSR 3. And it's not always Nvidia, I get that. Mantle came before DX12 and eventually morphed into Vulkan. I'm sure there are other non-Nvidia examples.

But again, love it or hate it, Nvidia is throwing its full weight into graphics and AI technologies. Looking at stock prices, company valuations, and the latest financial reports, what Nvidia has been doing is working out very well for the company. Gamers can complain, but any person with a sense of business models can't fault Nvidia for what it's doing.

I know a lot of people — I get asked for advice all the time by friends / family / others — who play games, where the default attitude is "buy Nvidia GPUs." Almost every time I suggest the possibility of an AMD GPU to such people, I get pushback. "I thought Nvidia was better," or "I don't really trust AMD and would prefer Nvidia," or "I'm not worried about an Nvidia monopoly, because it still makes better hardware." They're not wrong.

AMD hardware isn't bad, but I can't immediately point to one thing where it's leading from the front in the GPU realm right now. Which of course is hard to do when you're ~20% of the market. And this is why AMD (and even Intel) are pushing open source solutions. They have to, because they're minor players right now. For Nvidia, I can point to ray tracing, AI, and upscaling as three concrete examples of technology and techniques that it established, and not surprisingly it continues to lead in those areas.

In other words, if AMD wants to get ahead of Nvidia, it actually has to get ahead of Nvidia. It can't follow and thereby become the leader. Drafting (like you see in cycling or running or other sports) doesn't work in the business world. AMD needs to figure out a way to leapfrog Nvidia. And that's damn difficult to do, obviously. The last time AMD had a clearly technology-leading design was probably with the Radeon 9800 Pro, where it established its hardware as being clearly superior in every meaningful metric. It wasn't just the value alternative, it was the leader.

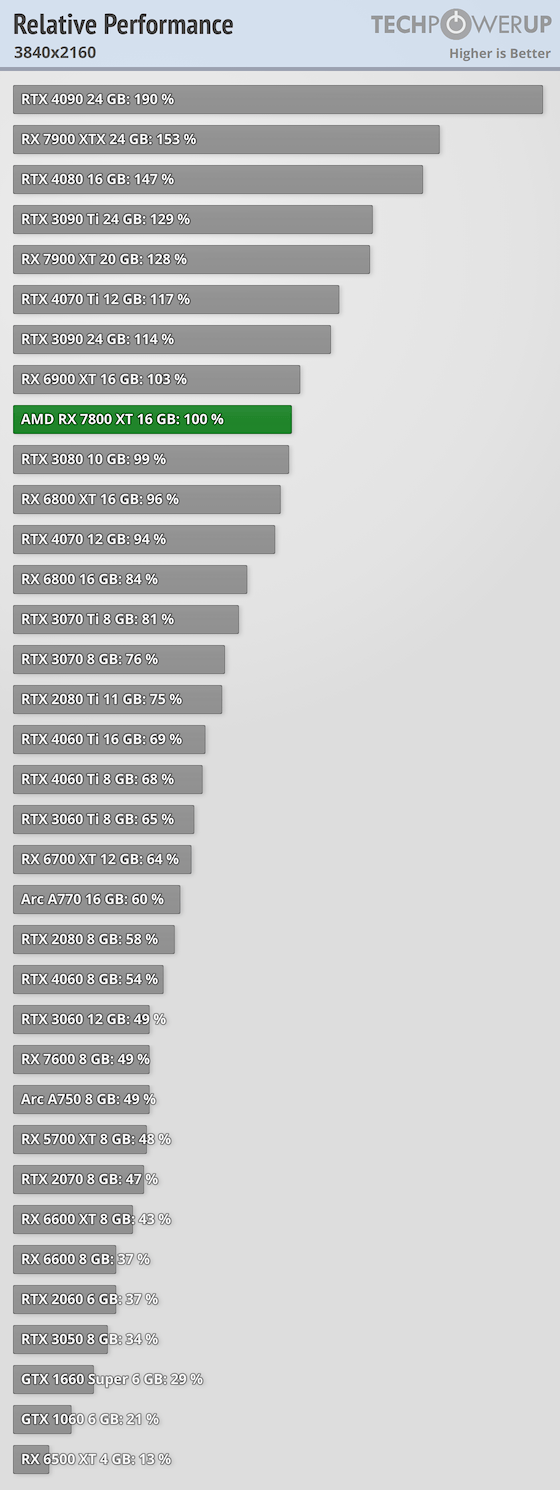

So yeah, if I knew how to take AMD and get it ahead of Nvidia, I would be seriously underselling myself by working as a tech journalist. The same applies to anyone on these forums. We talk, analyze, argue, etc. but no one actually has solutions. Even the pricing stuff, it might sound great to us to imagine an RX 7800 XT selling for $400, but if that actually happened? I'm not even sure what the result would be. Maybe Intel Battlemage will give us a better idea, because at least Intel seems willing to take a short-term loss in order to gain market share.