Avro Arrow

> It's so ridiculous.

What is? The fact that AMD is trying to deceive people or the fact that someone like me, an overt hater of both Intel and nVidia, is willing to call them on it?

> Only performances and price should matter, the name doesn't change anything.

Not for people who have a clue, like most people on this forum, but we represent a tiny minority of PC gamers. Most PC gamers wouldn't know a GTX 1660 Super from an RX 6600.

One day last winter, I was at a Canada Computers, waiting in a huge line at the internal parts counter (Storage/CPU/GPU/RAM/Motherboards). To pass the time, I was talking to a young couple who wanted a new video card. They said they had a budget of $1,000, and I asked them what they were looking to get. They said they were thinking of getting an RTX 3070 Ti. I asked them what display they had. It was some Acer (or ASUS, I can't remember the model #), but I looked up the specs on my phone and it was just a 1080p 60Hz monitor with no G-Sync or FreeSync.

I asked them why they wanted to spend $1,000 on a card for 1080p 60Hz gaming. They said that they wanted it to last for more than five years. I looked at the video card wall behind the counter and saw an ASRock RX 6700 XT Challenger D for over $300 less. I asked them what games they liked to play. They initially said things like CS:GO, Fortnite and COD:WZ, and I told them that the GTX 1660 Super they already had would play those games just fine at 1080p. I asked if there were any new AAA titles that they wanted to play, and they both agreed that they wanted to play CP2077.

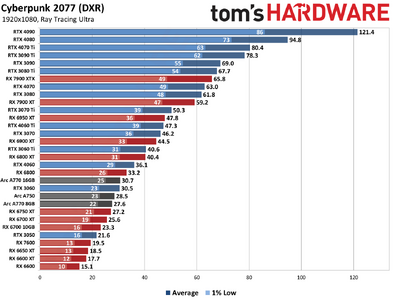

So, I found a performance table that had both cards listed at 1080p (on Guru3D) and it showed that the RTX 3070 Ti was only 3FPS behind the RTX 3070 Ti at 1080p Ultra:

So I showed them this and asked them if those extra 3 FPS were worth $100 each. Their eyes got wide, but then the girl piped up (I'll type it in script form to simplify the conversation):

The Girl: "Oh wait, but my PC has an Intel CPU."

Me: "Is that a problem?"

The Girl: "But, isn't that card for AMD computers? It says AMD on the box."

Me: "What? No. You can use any brand video card with any brand CPU."

The Girl: "Why does it say AMD on it?"

Me: "Oh, that's because AMD makes Radeon video cards. They bought ATi years ago."

The Guy: "Oh, that's an ATi card?"

Me: "Essentially, yes."

The Guy (to the Girl): "I had an ATi card long ago. They're good!"

The couple thanked me and purchased the RX 6700 XT. I told them about using DDU (Display Driver Uninstaller) to clear out the old drivers and showed the guy the Guru3D DDU page with usage instructions on his phone. I felt pretty good about saving them the $300, but it also blew my mind that someone could assume that, just because a video card says "AMD" on it, it was only for PCs with AMD CPUs. I mean, they don't assume that nVidia cards are only for PCs with nVidia CPUs so... yeah.
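The "$100 each" figure in the story is just the price gap divided by the frame-rate gap. A minimal Python sketch; `cost_per_extra_fps` is a hypothetical helper, and the inputs are the approximate numbers from the anecdote (roughly $300 more for roughly 3 FPS at 1080p Ultra), not new benchmark data.

```python
def cost_per_extra_fps(price_gap: float, fps_gap: float) -> float:
    """Dollars paid for each additional frame per second the pricier card delivers."""
    if fps_gap <= 0:
        # If the pricier card isn't faster, the premium buys no frames at all.
        raise ValueError("fps_gap must be positive")
    return price_gap / fps_gap

# Approximate figures from the anecdote: ~$300 more for ~3 FPS at 1080p Ultra.
print(cost_per_extra_fps(300, 3))  # 100.0
```

The guard clause is a design choice: a zero or negative FPS gap means the premium buys nothing, so the sketch refuses to report a price per frame.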

I realised that day just how tech-ignorant the general public is, and with that realisation came the knowledge of just how vulnerable the average consumer is to deceptive marketing tactics. It also validated the criticism I made of AMD back when they dropped the ATi branding: they were shooting themselves in the foot to stroke their own egos. People recognised the ATi name in video cards, but nobody knew WTH an AMD video card was and, for the most part, they still don't.

It's like when Hisense bought Sharp's TV division. They didn't rebrand all the Sharp TVs as Hisense, because replacing an established, well-recognised and respected brand with your own corporate logo is well known to be one of the worst courses of action possible. For most people, buying a PC is like buying a washing machine is for us: without the names on them, we wouldn't be able to tell a Whirlpool from a GE or a Bosch.

> In the current market this card is good.

The current market is terrible, so you're not setting the bar very high.

> It didn't lose anything, the launch price is way less expensive that 6800 xt, less power consumption and few things are added like av1

Sure, it's way less expensive than the RX 6800 XT was at launch, and it had better be, because it's THREE YEARS later and it's not really any faster.

It's clear that you don't understand something, so I'll explain it to you. The performance of a card puts it into a certain tier. In the following generation, a card with similar performance to an older card (e.g. the RX 5700 XT) will be in a lower tier and will still usually outperform it slightly at a lower price. That's how it has worked since the beginning, over 30 years ago.

Examples:

RX 6600 = RX 5700+4%

RX 6600 XT = RX 5700 XT+10%

RTX 3070 Ti = RTX 2080 Ti+12%

Generational uplifts:

RX 6600 XT = RX 5600 XT+28%

RX 6700 XT = RX 5700 XT+35%

RTX 3080 = RTX 2080+36%

GTX 1080 = GTX 980+51%

GTX 980 = GTX 780+38%

R9 Fury = R9 290+26%

The bare minimum acceptable performance uplift is 25% gen-over-gen.
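The uplift figures and the 25% rule can be checked mechanically. A minimal Python sketch, assuming "uplift" means the new card's average performance divided by the old card's, minus one; the helpers `uplift` and `meets_bar` are hypothetical, the gains in the table are the post's own numbers, and the 50 and 64 FPS pair is made up purely to show how a gain is derived.

```python
# Rule of thumb from the post: a new generation should beat the same-tier
# card it replaces by at least 25%.
MIN_UPLIFT = 0.25

def uplift(old_fps: float, new_fps: float) -> float:
    """Fractional gain of the new card over the old one, e.g. 0.28 for +28%."""
    if old_fps <= 0:
        raise ValueError("old_fps must be positive")
    return new_fps / old_fps - 1.0

def meets_bar(gain: float, minimum: float = MIN_UPLIFT) -> bool:
    """True if a gen-over-gen gain clears the minimum acceptable uplift."""
    return gain >= minimum

# Same-tier uplifts as quoted in the post: (new card, old card) -> gain.
GENERATIONAL_UPLIFTS = {
    ("RX 6600 XT", "RX 5600 XT"): 0.28,
    ("RX 6700 XT", "RX 5700 XT"): 0.35,
    ("RTX 3080", "RTX 2080"): 0.36,
    ("GTX 1080", "GTX 980"): 0.51,
    ("GTX 980", "GTX 780"): 0.38,
    ("R9 Fury", "R9 290"): 0.26,
}

for (new, old), gain in GENERATIONAL_UPLIFTS.items():
    status = "OK" if meets_bar(gain) else "below the bar"
    print(f"{new} vs {old}: {gain:+.0%} ({status})")

# Hypothetical average frame rates, only to illustrate the calculation.
print(f"{uplift(50.0, 64.0):+.0%}")  # +28%
```

Every pair in the post's "generational uplifts" list clears the 25% bar, which is the baseline the later releases are being judged against.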

What have been the two worst releases in GPU history? Oh yeah, the RX 6500 XT and the RTX 4060 Ti. I'm guessing that you haven't been paying attention, but here's the biggest reason why they were panned:

RX 6500 XT = RX 5500 XT+1% <- Should've been called the RX 6400

RTX 4060 Ti = RTX 3060 Ti+10% <- Should've been called the RTX 4050 Ti

By the same token:

RX 7700 XT = RX 6700 XT+22% <- Not great, should've been called the RX 7700

RX 7800 XT = RX 6800 XT+5% <- Even worse than the RTX 4060 Ti

So, sure, the price is better but it's not amazing.
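Applying the same 25% rule to these releases makes the ranking explicit. A minimal Python sketch; `shortfall` is a hypothetical helper, and the gains are the figures quoted in the post, not new benchmark data.

```python
MIN_UPLIFT = 0.25  # the post's minimum acceptable gen-over-gen uplift

# Same-tier, gen-over-gen uplifts as quoted in the post.
RELEASES = {
    "RX 6500 XT vs RX 5500 XT": 0.01,
    "RTX 4060 Ti vs RTX 3060 Ti": 0.10,
    "RX 7700 XT vs RX 6700 XT": 0.22,
    "RX 7800 XT vs RX 6800 XT": 0.05,
}

def shortfall(gain: float, minimum: float = MIN_UPLIFT) -> float:
    """How far (as a fraction) a gain falls short of the minimum; 0.0 if it clears it."""
    return max(0.0, minimum - gain)

# Worst first: the largest shortfall goes to the top of the list.
ranked = sorted(RELEASES, key=lambda name: shortfall(RELEASES[name]), reverse=True)
for name in ranked:
    gain = RELEASES[name]
    print(f"{name}: {gain:+.0%}, misses the bar by {shortfall(gain):.0%}")
```

Sorted this way, the RX 7800 XT lands second-worst, behind only the RX 6500 XT and worse than the RTX 4060 Ti, while the RX 7700 XT misses by only a few points.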

Consider the RX 7700 XT. Before the silicon shortage, a level-7 XT card had an MSRP of $399 (RX 5700 XT). That ballooned up to $479 during the pandemic and is now at $449. So, they managed to increase the MSRP by 12.5% over the past 4 years. THIS is why the RX 7700 XT is overpriced. If it were set to $400 like it should be, it would be properly positioned in the market. As for the RX 7800 XT, it offers great value for $500 in today's market, but it's completely out of place performance-wise.
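The MSRP arithmetic is a simple percentage change against the pre-shortage baseline. A minimal Python sketch; `pct_change` and `TARGET` are hypothetical names, the prices are the USD launch MSRPs from the post, and since the post doesn't name the $479 card, labelling it the RX 6700 XT (which launched at that price) is an addition.

```python
def pct_change(old: float, new: float) -> float:
    """Fractional change from old to new, e.g. 0.125 for +12.5%."""
    return (new - old) / old

# Launch MSRPs (USD) of the "level-7 XT" cards discussed in the post.
MSRPS = [
    ("RX 5700 XT", 399),  # pre-shortage baseline
    ("RX 6700 XT", 479),  # pandemic-era launch
    ("RX 7700 XT", 449),  # current generation
]

baseline = MSRPS[0][1]
for card, price in MSRPS:
    print(f"{card}: ${price} ({pct_change(baseline, price):+.1%} vs baseline)")

# The price the post argues the RX 7700 XT should have launched at.
TARGET = 400
print(f"Gap to target: ${MSRPS[-1][1] - TARGET}")
```

Against the $399 baseline, $449 works out to about +12.5% (matching the post) and $479 to about +20.1%.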

AMD has been fudging A LOT of numbers lately.