My point was: why pay over retail for an AMD 5000-series CPU right now when Intel CPUs, which are much cheaper and available now, provide mostly the same gaming performance above 1080p?

(The same really couldn't be said for AMD 3000-series CPUs.)

Actually, yes: at resolutions above 1080p, gaming performance has been fairly similar between AMD and Intel for years, as long as enough threads are available, since modern AAA games at those resolutions will typically be limited by the graphics card rather than the CPU. Unless one is chasing higher frame rates on a high-refresh-rate monitor in competitive e-sports titles, the differences between recent mid-range or better processors will tend to be largely imperceptible. I agree, though, that people shouldn't be paying the significantly marked-up prices third-party sellers are charging for Ryzen 5000 processors.

So? Even if you put that much power through an AMD 5000-series CPU, apparently it cannot do anywhere near 5.3 GHz on all cores. So, my point stands that AMD is behind in overclocking.

Clock rates themselves don't matter much compared to the actual performance delivered, and the higher IPC of the current Ryzen chips means they can match or often exceed that level of performance at lower clock rates. The Intel processors do have more overclocking headroom, but that simply means Intel isn't fully utilizing their capabilities out of the box, which is arguably less desirable but necessary to keep their power draw reasonable. So while those Intel chips do leave more performance on the table, that's not exactly a positive feature; it would be better if they could deliver that extra performance without the need for overclocking.

So, I don't see why you are so worried about power.

If a component ends up drawing an extra hundred dollars or more of electricity over the course of its use, that's certainly relevant to price comparisons. It could mean the difference between being able to move up to the next tier of component with the more efficient parts, for example, or putting that money elsewhere in the system.

More importantly, higher power draw affects more than just the electricity bill. That extra power translates directly into extra heat put out by the component. So one will likely need a larger, more expensive CPU cooler, temperatures inside the case will generally be higher unless the rest of the cooling system is upgraded too, and all of that can mean more noise.

Plus, once that extra heat is exhausted from the system, it will warm the room and can have some additional impact on home cooling costs. That could actually be beneficial for someone who lives in a cool climate and doesn't pay to cool their house in the summer. But for someone living in a warmer climate, having additional heat pumped into their room is not going to be ideal, whether it's due to increased air-conditioning costs or less comfortable room temperatures.

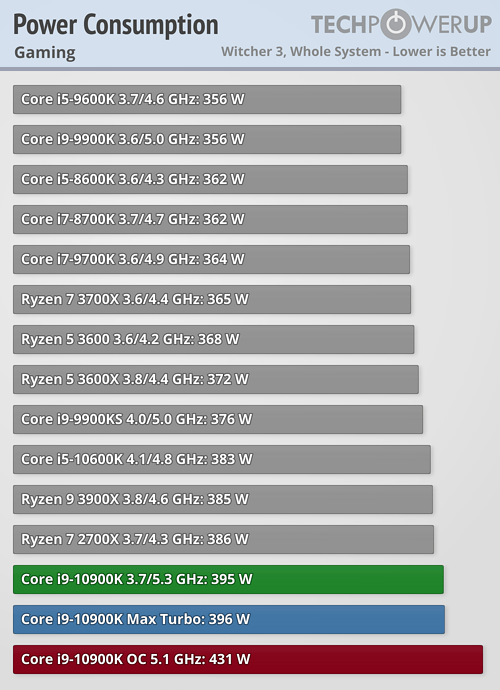

As seen in this chart, a complete system with a 10900K overclocked to 5.1 GHz on all cores pulls only 46 more watts than a system with a 3900X in gaming.

Adjusting your calculation for a 46-watt difference results in an annual electricity bill difference of $17.46. Also keep in mind that the 10900K will be faster than a 3900X in gaming, so you'll be paying less than $20 a year more for better performance.

Yeah, I wouldn't expect the worst-case difference in power draw to matter as much unless one is doing something like rendering or encoding video all day. But why are you comparing a 10900K against a 3900X for gaming? They don't even have the same core counts, and the extra cores of 10- and 12-core processors are not particularly relevant to today's games, and probably won't be for years to come. A better comparison would be a 5800X against a 10700K. The system with a 5800X draws around 364 watts in TechPowerUp's gaming power test, while the one with a 10700K overclocked to 5.1 GHz drew 407 watts. That's a difference of about 43 watts, similar to what you mentioned, but that's only a 5.1 GHz overclock, not the previously suggested 5.3 GHz.
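For anyone who wants to check these numbers, here's a minimal Python sketch of the arithmetic. The original calculation being "adjusted" isn't shown in this thread, so the usage and rate here are assumptions: 8 hours of gaming a day at $0.13/kWh happens to reproduce the quoted $17.46 for 46 watts, but real rates and usage vary a lot.

```python
def annual_cost(extra_watts: float, hours_per_day: float, price_per_kwh: float) -> float:
    """Yearly cost of an extra power draw, in the same currency as price_per_kwh."""
    kwh_per_year = extra_watts / 1000 * hours_per_day * 365  # watts -> kWh over a year
    return kwh_per_year * price_per_kwh


# Assumed usage and rate (not from the original calculation): 8 h/day at $0.13/kWh.
HOURS_PER_DAY = 8
PRICE_PER_KWH = 0.13

# Full-system gaming power differences discussed above, in watts.
for label, watts in [("10900K OC vs 3900X", 46), ("10700K OC vs 5800X", 407 - 364)]:
    yearly = annual_cost(watts, HOURS_PER_DAY, PRICE_PER_KWH)
    print(f"{label}: {watts} W -> ${yearly:.2f}/year, ${yearly * 5:.2f} over 5 years")
```

Under those assumptions, the 46 W gap works out to about $17.46 a year and the ~43 W gap to about $16.32, or roughly $80 to $90 over five years. More hours per day, a higher electricity rate, or sustained all-core loads like rendering would push those totals higher.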

There are also a lot of vagaries in TechPowerUp's CPU testing methodology that aren't outlined in their reviews. Naturally, those full-system measurements will be affected by the use of different motherboards for different processors, and even for the 5800X they still tested with an X570 board, despite the newer B550 models offering a feature set more comparable to Intel's Z490 while using a more efficient chipset than the PCIe 4.0 one on X570. So it's not exactly an apples-to-apples comparison. And what resolution are they running their gaming power test at? The reviews don't seem to specify. And is The Witcher 3 even still a relevant game for testing CPU power draw? It's well over 5 years old at this point, and came out when 4-core, 8-thread processors were considered high-end, so its thread utilization probably isn't comparable to many newer titles. That's the problem with basing a CPU power test on a single game: the results might hold for that game but could be significantly different in others that use more or fewer threads. As a result, those charts might not be particularly meaningful,