And the sample size of the Ryzen 7 5800X3D is just "1". Only ONE! THE SAMPLE SIZE IS JUST ONE! Well, it was the other day when I was on here!

Yes, it could be a poorly configured system. Or it could be that there is a pretty wide variation in the quality of the 5800X3D. If reviewers got their samples from AMD, they were likely cherry-picked to show the best possible performance.

So let's say that the 5800X3D is 6% slower than the 5800X in single-thread performance. The 5800X scores 3,486 with a whopping 4,314 samples, so that figure should be very accurate. Doing the calculation, 3,486 × 0.94 (that is, 100% - 6%) = 3,277. And since the 12900KS scores 4,308, that makes the 12900KS "only" 31.5% faster in single-threaded performance.

Fair enough?
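For anyone who wants to check the arithmetic, here is a minimal Python sketch of the estimate above. It simply hard-codes the post's assumption that the 5800X3D is 6% slower than the 5800X in single-thread work; that 6% is a hypothesis, not a Passmark figure, and the helper names `scaled_score` and `percent_faster` are made up for illustration.

```python
def scaled_score(reference: float, deficit: float) -> float:
    """Estimate a score that is `deficit` (e.g. 0.06 for 6%) below `reference`."""
    return reference * (1.0 - deficit)


def percent_faster(a: float, b: float) -> float:
    """Percent by which score `a` exceeds score `b`."""
    return (a / b - 1.0) * 100.0


# Passmark single-thread figures quoted in the post
score_5800x = 3486
score_12900ks = 4308

# Assumed (not measured): the 5800X3D is 6% slower than the 5800X
estimate_5800x3d = scaled_score(score_5800x, 0.06)
print(round(estimate_5800x3d))  # 3277
print(round(percent_faster(score_12900ks, estimate_5800x3d), 1))  # 31.5
```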

Intel & AMD Processor Hierarchy

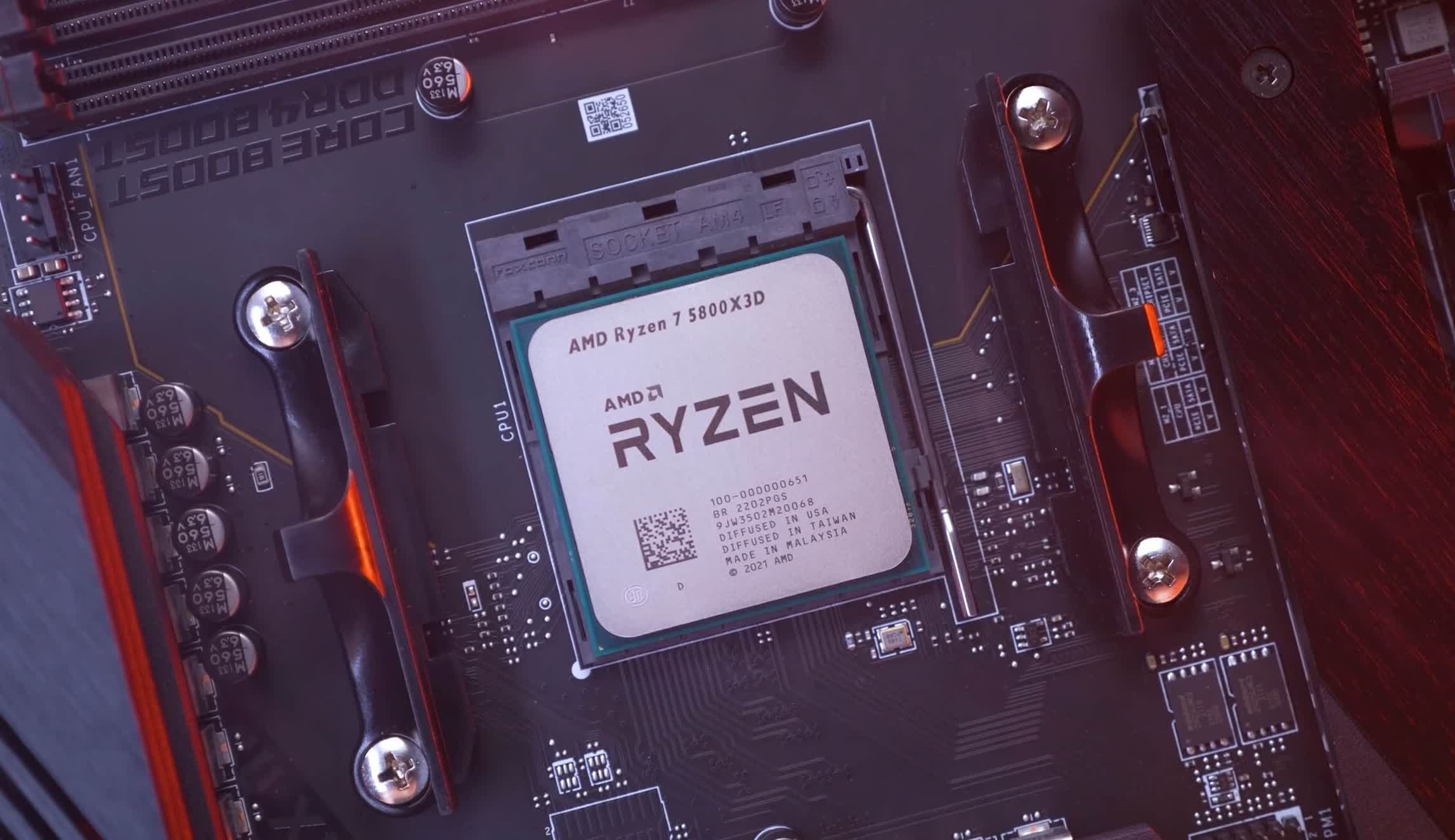

It has been a little while now, 11 days in fact, since your Ryzen 7 5800X3D review, @PaulAlcorn.

Is there any particular reason the 5800X3D isn't listed on your 'Intel and AMD Gaming CPU Benchmarks Hierarchy'?

I don't think they could be bothered with retesting it in Win10, because the 12900K/KS runs crap in it!

The chart specifically says '...Gaming CPU Benchmark'.

The article mentions the 5800X3D.

The Windows 11 graph shows the 5800X3D on top.

The Windows 10 graph doesn't show it at all...?

I guess they didn't finish testing the 5800X3D on Windows 10...? That's one Hell of an omission though. If you are actually mentioning a new CPU in the article, one that is supposed to take the top spot in gaming performance, you should finish all your testing before you decide to just leave it off the charts/lists without any explanation whatsoever.

M42

Reputable

There are 11 samples today, with an average score of 2,815, and it needs 462 more points (16%) to reach the calculated 3,277 score, which is still 6% slower than the 5800X.
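The shortfall can be expressed the same way. A small sketch, assuming the 3,277 target from the earlier 6%-slower estimate; `gap_to_target` is a hypothetical helper, and the percentage is measured relative to the current average, which is why it comes out near 16%.

```python
def gap_to_target(current: float, target: float) -> tuple[float, float]:
    """Return (points still needed, that shortfall as a percent of `current`)."""
    points = target - current
    return points, points / current * 100.0


# 11-sample Passmark single-thread average vs. the estimated 3,277 target
points, pct = gap_to_target(2815, 3277)
print(points, round(pct, 1))  # 462 16.4
```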

But it's gone up 300 points in a few days! Trending in the right direction!

M42

Reputable

There are 13 scores this morning; the two newest scores drop the average by 49 points to 2,766. One possibility is that users are replacing the CPUs in their older systems without upgrading the memory or cooling, and the latter may cause the 5800X3D to throttle early.
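The two snapshots also let you back out roughly what the new submissions scored. This is a sketch under two assumptions: that exactly two submissions were added between the 11-sample and 13-sample snapshots, and that the displayed averages are exact (Passmark rounds them, so the result is only approximate). `implied_new_mean` is an illustrative name.

```python
def implied_new_mean(old_n: int, old_mean: float, new_n: int, new_mean: float) -> float:
    """Average of the submissions added between two snapshots of a running mean."""
    added = new_n - old_n
    if added <= 0:
        raise ValueError("new_n must be larger than old_n")
    # Total of all scores now, minus total of the scores before
    added_total = new_n * new_mean - old_n * old_mean
    return added_total / added


print(implied_new_mean(11, 2815, 13, 2766))  # 2496.5
```

A result around 2,500, well below the 2,815 average, is at least consistent with the newest systems being held back by something, though the arithmetic alone cannot say whether that is memory, cooling, or anything else.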

It's been 13 days now and the Ryzen 7 5800X3D is still not listed at the top of the chart. In fact, it's not listed anywhere.

Come on Tom's Hardware, lift your game!

The Intel 12900K was up there the same day it was reviewed, and you guys also boasted that it had just become your new test rig!

Just more Intel industry bias!

It's a money-driven cancer that is deeply ingrained and has taken over!

It's everywhere!

JarredWaltonGPU

Splendid

Paul is busily testing the Ryzen 7 5700X and probably just hasn't taken time to update all the charts and text for the "best CPUs" article. It's also important to note that the Ryzen 7 5800X3D sold out almost immediately and isn't currently available for anywhere close to the $449.99 SEP. I see "one left in stock" on Amazon for over $600, or else you have to take your chances with eBay scalpers. Anyway, I've reminded Paul that the natives are restless over the missing 5800X3D and I'm sure we'll get updated charts in the near future.

We are definitely concerned that, much like the Ryzen 3 3300X was basically unavailable for any reasonable price after it launched, the 5800X3D could end up being a similar story. The cost of the extra chip stacking and cache is right around $100, supposedly, which means AMD's margins on the 5800X3D are no better than on the 5800X, and in fact the added time spent in packaging and such might make it a less profitable chip to manufacture. It still needs to be listed in our charts and such, and if you can find one for a reasonable price it's a very fast gaming chip. Is it the best gaming CPU overall? If all you care about is gaming, perhaps, but a lot of people would likely sacrifice the <5% or so in performance (at higher resolutions) and get a more well-rounded CPU, especially if the CPU is actually available to buy in quantity.

Dude, you gotta chill. It'll get there.

Yes, maybe I have gone a bit hard, but mark my words, there is definitely some truth to them.

Thank you for your reply.

But you are saying Paul is busy and has not had time to update it (almost 2 weeks), which is a long time to test the 5700X. Secondly, you talk about the poor availability and pricing of the 5800X3D, but that has absolutely nothing to do with not having the review findings added to the CPU hierarchy list!

You have tested it and got your results, and not added it to the hierarchy list!

I guess I will just keep waiting every day to see when it is added!

M42

Reputable

One reason for the delay could be this: https://www.tomshardware.com/news/gaming-worse-on-linux-ryzen-5800x3d

JarredWaltonGPU

Splendid

There's no truth to your claims whatsoever. In fact, your whole assertion that the CPU Hierarchy hasn't been updated is wrong. I asked Paul about it and he said, "They've been in there for a while."

I checked the hierarchy, and sure enough, the Ryzen 7 5800X3D sits at the top of the gaming performance charts. More importantly, the article was last updated April 16, when I corrected a couple of typos, and Paul's last edit where he added the 5800X3D charts was on April 16 at 7:01 am. So what exactly are you talking about here? The Ryzen 7 5700X review should go up soon, and it will also get added to the charts.

Next time, I guess I'll just ignore your shouting about things that aren't even correct. Don't be the boy who cried wolf. Here's the proof:

alceryes

Splendid

I think he was referring to the Windows 10 graph and the Windows 10 text hierarchy (scroll down a bit from the one you listed).

I can understand being busy but the context timing is a bit suspicious. We all know that Paul was aware that the 5800X3D was supposed to take the top CPU spot in gaming performance. Paul even mentions the 5800X3D earlier in the article. By general assumption, these charts were going to just reinforce what we already knew about the CPU, and yet the 5800X3D was left off all Windows 10 charts.

Paul should've waited until he had a complete picture or at least put a one-liner in there saying that his 5800X3D testing was not yet complete. Instead, you have neophytes and novices hitting these charts, thinking that Intel's top CPU still holds the gaming performance crown - which is 100% false.

Edit - Unless the 5800X3D was specifically left off these charts, on purpose, to create more banter (and by extension page hits). In which case I say shame on you - bad form!

JarredWaltonGPU

Splendid

Windows 10 testing might be getting phased out. Testing every CPU on two different OSes is a major time sink, and I know personally I would refuse to do it at this point. From the review:

"Aside from a few errant programs for Intel, the overall trends for both AMD and Intel should be similar with Windows 10 and 11. As such, we're sticking with Windows 11 benchmarks in this article. We also stuck with DDR4 for this round of Alder Lake testing, as overall performance trends are generally comparable between DDR4 and DDR5. We have a deeper dive into what that looks like in our initial 12900K review."

So, Paul doesn't have Win10 benchmarks because that's now the "old" platform and will likely no longer be maintained. I did the same thing with graphics cards a few months back. Every GPU now gets tested with Win11, the Win10 data is provided for cards released before the switch (meaning Jan 2022 or earlier), but I'm not going to go do additional testing of RTX 3090 Ti with the previous Core i9-9900K testbed. We did look at Win10 vs. Win11 performance in the past, and most variability wasn't significant. AMD with fTPM had some stuttering that's supposed to be fixed now (or soon), Alder Lake needs Win11 scheduling, etc. If you don't want Windows 11, don't get an Alder Lake CPU that has E-cores.

alceryes

Splendid

It would've been great if Paul had added a quick quip saying such.

Calling the Windows 10 portions 'legacy' and adding a one-liner saying that the Windows 10 benchmarks, charts, and performance lists won't be updated anymore, could've cleared up the confusion easily.

I just feel that it should've been stated, clearly, in the new CPU hierarchy article.

I also think that, in the long run, this move will hurt Tom's readership. You wrote an article that is focused on PC gaming performance and yet decided to no longer add new hardware metrics for the OS that 75+% of Steam gamers still use(?) ...but, hey, powers that be, right?

JarredWaltonGPU

Splendid

It's the price of "progress." Microsoft locked the Alder Lake scheduling improvements to Win11, so it's basically required. Outside of ADL, most games and apps don't really change in performance with Win10 vs. Win11; it's less than a 2% difference, as I recall. There will probably be a few exceptions, and I get that Win10 remains more popular than Win11. Win7 was more popular than Win8, but most tech sites migrated to Win8 by the time 8.1 came out. You can't test everything, every time, so when you test generational OS performance and don't find any major issues, newer versions almost always get used. I still run Win10 on my personal PCs, but Win11 is what nearly all new PCs will come with.

"Yes, maybe I have gone a bit hard, but mark my words, there is definitely some truth to them."

No. There's no grand anti-AMD conspiracy here. Full stop.

alceryes

Splendid

Do you have additional info on this or are you just referencing the status quo?

Much of the Thread Director is in the Windows 10 kernel, just dormant. I'm hoping that they eventually enable it fully.

JarredWaltonGPU

Splendid

I'm sure Microsoft doesn't want to enable the full Thread Director on Win10, because that would remove one major reason to upgrade. Technically, as a programmer, there's no good reason the new scheduler can't be ported to Windows 10. After all, Windows 11 is really just Windows 10 with a new coat of paint and a bunch of extra context menus and such. But just like DirectX 12 wasn't backported to Win7 for a while, there's no reason for MS to rush to support TD on Win10 — and Windows 8/8.1 never did get DX12 support.

This is what I am talking about. It is the main thing that gets looked at, in my opinion.

When you log in, you look at the big list, see what's on top, and work your way down!

The 12900K is at the top and the 5800X3D is not on it! Yes, it's Win10!

The benchmarks with the 5800X3D are there above it, as you have posted, but that is not the Gaming CPU Hierarchy list.

I know it's Windows 10, and you have said above you won't be doing any more Win10, but where is a Win11 version?

Intel and AMD Gaming CPU Benchmarks Hierarchy

Gaming CPU Benchmarks Hierarchy - Windows 10

M42

Reputable

Just to follow up on the 5800X3D's single-thread performance, its score across 21 Passmark submissions is now 2,843, and the margin for error is now "low".

In the same benchmark, the i9-12900KS today has a single-thread score of 4,334, making it about 52% faster in single-thread performance. The 5800X's single-thread score is 3,485, which is 22.6% faster. Even the 5800 scores 19% higher, at 3,386.
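The three percentages can be reproduced from the raw scores with a short sketch. The figures are the Passmark single-thread numbers quoted above; `relative_gains` is an illustrative helper, and rounding to one decimal gives 52.4%, 22.6%, and 19.1%, which the post rounds to "about 52%", 22.6%, and 19%.

```python
def relative_gains(scores: dict[str, int], baseline: int) -> dict[str, float]:
    """Percent by which each score exceeds `baseline`."""
    return {name: (score / baseline - 1.0) * 100.0 for name, score in scores.items()}


# Passmark single-thread figures quoted in the post
single_thread = {
    "Core i9-12900KS": 4334,
    "Ryzen 7 5800X": 3485,
    "Ryzen 7 5800": 3386,
}
ryzen_5800x3d = 2843  # average of 21 submissions at the time of the post

gains = relative_gains(single_thread, ryzen_5800x3d)
for name, pct in gains.items():
    print(f"{name}: {pct:.1f}% faster than the 5800X3D")
```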

cryoburner

Judicious

And that should just support prior suggestions that synthetic benchmarks like Passmark should not be relied upon to provide an accurate representation of performance in real-world applications, as sometimes their scores will see more benefit from certain hardware architectures than from others, in ways that won't always align with other software.

And again, the scores don't make much sense even going by the small difference in clock rates compared to the architecturally similar 5800X, unless there are a lot of poorly configured 5800X3D systems among those submissions. Looking at the distribution of individual 5800X3D results, their overall CPU Mark scores are separated between a relatively tight grouping of higher scoring results, clearly the properly configured systems, and a separate much slower grouping of results, with the group of properly configured systems performing on-average over 25% faster than the misconfigured systems that are holding back results. And while Passmark might want you to believe that 21 submissions from random online users is enough to provide a "low" margin of error, that's complete nonsense. We don't know who tested these systems. Someone could have tested a number of different RAM configurations to see how they affect performance, or an early OEM prebuilt system could be getting sold with slow, single-channel memory, or someone could even be purposely submitting misconfigured results to manipulate the numbers, for all we know.

As for the possibility of these mixed results being the norm, that doesn't appear to be supported by another synthetic benchmarking site that, despite being very obviously anti-AMD right down to their CPU descriptions, shows expected results relative to the 5800X. According to their database of 304 user test runs, the single-threaded performance of the 5800X is only 6% faster than the 5800X3D, as might be expected for an application not benefiting from the additional cache. Passmark is likely a more reputable site, even if they get less traffic, but that's still more evidence suggesting that the current Passmark results are not representative of real-world performance, and that they are at the very least getting thrown off by a number of questionable submissions affecting their small number of samples.
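To illustrate the small-sample point, here is a rough Python sketch using the standard library's `statistics` module. The scores are invented, not Passmark data: a tight cluster standing in for well-configured systems and a slower cluster that the first one beats by roughly 25%, mirroring the split described above. It uses a simple normal-approximation interval, which is, if anything, optimistic for samples this small; `mean_and_half_width` is a hypothetical helper.

```python
import statistics


def mean_and_half_width(scores: list[float], z: float = 1.96) -> tuple[float, float]:
    """Sample mean and the half-width of an approximate 95% confidence interval."""
    mean = statistics.mean(scores)
    std_error = statistics.stdev(scores) / len(scores) ** 0.5
    return mean, z * std_error


# Hypothetical scores in arbitrary units, NOT real Passmark submissions.
well_configured = [100, 101, 99, 100, 102, 98]
misconfigured = [78, 80, 79, 81]  # the cluster above is roughly 25% faster than this one
mixed = well_configured + misconfigured

for label, sample in (("well-configured only", well_configured), ("mixed", mixed)):
    mean, hw = mean_and_half_width(sample)
    print(f"{label}: mean {mean:.1f} +/- {hw:.1f} (n={len(sample)})")
```

Adding four slow results both drags the mean down and widens the interval more than fivefold, which is one reason a small, uncurated sample can be less reliable than its label suggests.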

JarredWaltonGPU

Splendid

My bet would be someone has the Power Saving profile enabled rather than using the default Balanced profile. It's either that or someone has seriously fubared their BIOS settings or installed a bunch of other garbage in Windows that's slowing things down. Probably just power settings, though, or a completely inadequate CPU cooler perhaps. Maybe someone put a Wraith Spire or Wraith Stealth on the 5800X3D? 🙃

JarredWaltonGPU

Splendid

Ah, I see what you're getting at. The first charts in the article are for Windows 11 Gaming, followed by Windows 10 Gaming. There are six images in each gallery: 1080p AVG, 1080p 99th Percentile, 1440p AVG, 1440p 99th, Multi-threaded CPU, and Single-threaded CPU. For Win11, the 5800X3D and "overclocked" (with DDR4-3800 memory) 5800X3D are in the top two spots on the first four charts. But because Paul didn't do Win10 testing, and the table of CPUs gets sorted by Win10 performance, the 5800X3D is MIA. I've pinged him to check. It may be that he's working through updated tests on all the major CPUs using Win11, or maybe he'll give in and keep testing with Win10 as well. Either way it's a pain.

M42

Reputable

Yes, there are going to be some poor Passmark submissions for every processor, including every Alder Lake CPU. As I have mentioned before, when I build systems I try to choose the best performing components to try to create a highly performant balanced system. The results of that effort are usually a system that not only performs measurably better in the applications I run but also scores much higher than the average score in Passmark for a given CPU. So I believe there can be a good performance correlation between applications and some synthetic benchmarks.

I believe one reason the 5800X3D has not scored as well is that it cannot be easily overclocked. Many of the online articles on 5800X3D performance were done with stock CPU settings, yet I believe many Passmark submissions were done with overclocked, optimized systems, thus better representing what you can expect with optimal system components. And not all online sites evaluating the 5800X3D show a 7% performance difference between the 5800X and 5800X3D in single-thread performance. For example, if you scroll down to the Cinebench R23 single-core test at this link, there is a 12% difference when running stock. The gap would be wider if the 5800X were overclocked. And if you do the math, it shows the 12900KS is 53% faster (2,141 vs. 1,398), which is close to what the Passmark single-thread test reports.

AMD Ryzen 7 5800X3D Review: Gaming-First CPU

Making CPU cores faster rather than adding more cores is the best way to boost PC gaming performance. That's why AMD has supercharged their 8-core, 16-thread CPU...

www.techspot.com
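The Cinebench comparison can be checked the same way as the Passmark numbers. A minimal sketch: the Cinebench R23 single-core scores (2,141 and 1,398) come from the post above, the Passmark single-thread figures (4,334 and 2,843) from the earlier comparison in this thread, and `percent_faster` is an illustrative helper.

```python
def percent_faster(a: float, b: float) -> float:
    """Percent by which score `a` exceeds score `b`."""
    return (a / b - 1.0) * 100.0


cinebench_r23_1t = percent_faster(2141, 1398)  # 12900KS vs. 5800X3D
passmark_1t = percent_faster(4334, 2843)       # 12900KS vs. 5800X3D

print(f"Cinebench R23 single-core: {cinebench_r23_1t:.1f}%")
print(f"Passmark single-thread:    {passmark_1t:.1f}%")
```

Both come out in the low 50s (about 53.1% and 52.4%), which is the closeness the post is pointing at.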
