Intel's Future Chips: News, Rumours & Reviews

Page 55

- Status

- Not open for further replies.

Supernova1138

Illustrious

con635 :

^Is broadwell i7 with iris not the 'ultimate' gaming CPU? I thought skylake hid the regression with higher stock clocks?

So Intel have told mobo makers no more bclk oc'ing on non k chips 🙁 and no xeon e3s on consumer boards anyway 🙁

Most gamers will be using a dedicated graphics card, so the Iris Pro integrated graphics are largely moot; higher CPU clock rates would be more important. The only people who really game on integrated graphics are those who can't afford a dedicated GPU, and the i5 5675C and i7 5775C are way too costly to appeal to people on that low a budget.

Apparently Intel is telling the motherboard makers to knock off BCLK overclocking. It will probably still be possible with the unsupported BIOSes available now, assuming Intel doesn't get Microsoft to throw an update into Windows 10 to disable it at the OS level. The Xeons will now be restricted to workstation chipsets, since they are hurting i7 sales. We'll see whether motherboard manufacturers put out some boards with the workstation chipsets geared more towards consumers.

Supernova1138 :

con635 :

^Is broadwell i7 with iris not the 'ultimate' gaming CPU? I thought skylake hid the regression with higher stock clocks?

So Intel have told mobo makers no more bclk oc'ing on non k chips 🙁 and no xeon e3s on consumer boards anyway 🙁

Most gamers will be using a dedicated graphics card, so the Iris Pro integrated graphics are largely moot; higher CPU clock rates would be more important. The only people who really game on integrated graphics are those who can't afford a dedicated GPU, and the i5 5675C and i7 5775C are way too costly to appeal to people on that low a budget.

Apparently Intel is telling the motherboard makers to knock off BCLK overclocking. It will probably still be possible with the unsupported BIOSes available now, assuming Intel doesn't get Microsoft to throw an update into Windows 10 to disable it at the OS level. The Xeons will now be restricted to workstation chipsets, since they are hurting i7 sales. We'll see whether motherboard manufacturers put out some boards with the workstation chipsets geared more towards consumers.

Not what I was talking about. With a dGPU, clock for clock, Broadwell is just better than Skylake at games: it uses the eDRAM as an L4 cache and (apparently) has a better memory controller, as it's DDR3 only. Skylake, from what I've read, needs 300-400 MHz more to match Broadwell. I'm sure AnandTech did a good article on this.

con635 :

^Is broadwell i7 with iris not the 'ultimate' gaming CPU? I thought skylake hid the regression with higher stock clocks?

So Intel have told mobo makers no more bclk oc'ing on non k chips 🙁 and no xeon e3s on consumer boards anyway 🙁

Intel pitched a fit about non-Z overclocking. BCLK overclocking is still fine on a Z170 board. ASRock is supposed to release an overclocking C232 board for the Xeons. My guess is that they found a loophole for Xeon overclocking, since Z170 boards don't support them. http://www.asrock.com/mb/Intel/Fatal1ty%20E3V5%20Performance%20GamingOC/index.us.asp

Alpha3031

Honorable

What do you think of the 5.1 GHz Xeon?

This seems to be the original source.

I've only brought enough salt for myself, I'm afraid.

Alpha3031

Honorable

okcnaline :

Doubt it. There's no market for high clock speeds with enterprises. If Intel releases a 5.1 Ghz processor, most likely it'll be an i7, where enthusiasts sit.

Well, Intel thought it was a good idea for the Xeon X5698. High frequency trading, anyone?

okcnaline

Splendid

Alpha3031 :

Welp... 😛 My only defense would be that the X5698 was released back when lithography nodes were larger and Intel couldn't fit more cores, so they just clocked the CPU at 4.4 GHz to speed things up.

gamerk316

Glorious

fudgecakes99 :

turkey3_scratch :

I always recommend the 5820K; I can't recall ever recommending the 6700K at that price. But then people go, "Blah blah blah 6700K better for gaming blah blah" and I say - wait a second - why aren't you getting an i5 for gaming? An i7 is not meant for sheer gaming. For multi-threaded workstation-oriented tasks, or productivity, or recording and video editing, the 5820K is a no-brainer, but people in their foolishness don't get a 6600K for gaming and then talk crap about the 5820K because the 6700K is better.

Just had to let that out

Not entirely. I'd imagine that in certain games, like say Total War, which is pretty heavily CPU intensive, it'd outperform a 6600K, but for the most part I'd be hard pressed to choose a 6700K over a 6600K for pure gaming. That said, I swear you changed your profile pic to a snake a few days ago? Or am I just seeing things?

Here's the difference:

5820k: 3.3GHz

6700k: 4GHz

We've been over this: The extra CPU cores don't matter, so the 700MHz base clock speed advantage wins. Even in tasks that do use those extra threads, unless you get near 80% core loading, the extra clock STILL wins out.

Except that with the extra threads, the 5820k would win out if an application took advantage of them. Look at most of the higher core count Xeons. They don't have very high clock speeds, because they don't need them. The highest clocked Xeon is a quad core @ 3.7GHz. An 18 core Xeon is only clocked @ 2.3GHz. Multithreaded environments benefit more from the extra cache and threads. In most games, clock speed doesn't really matter as much anymore.

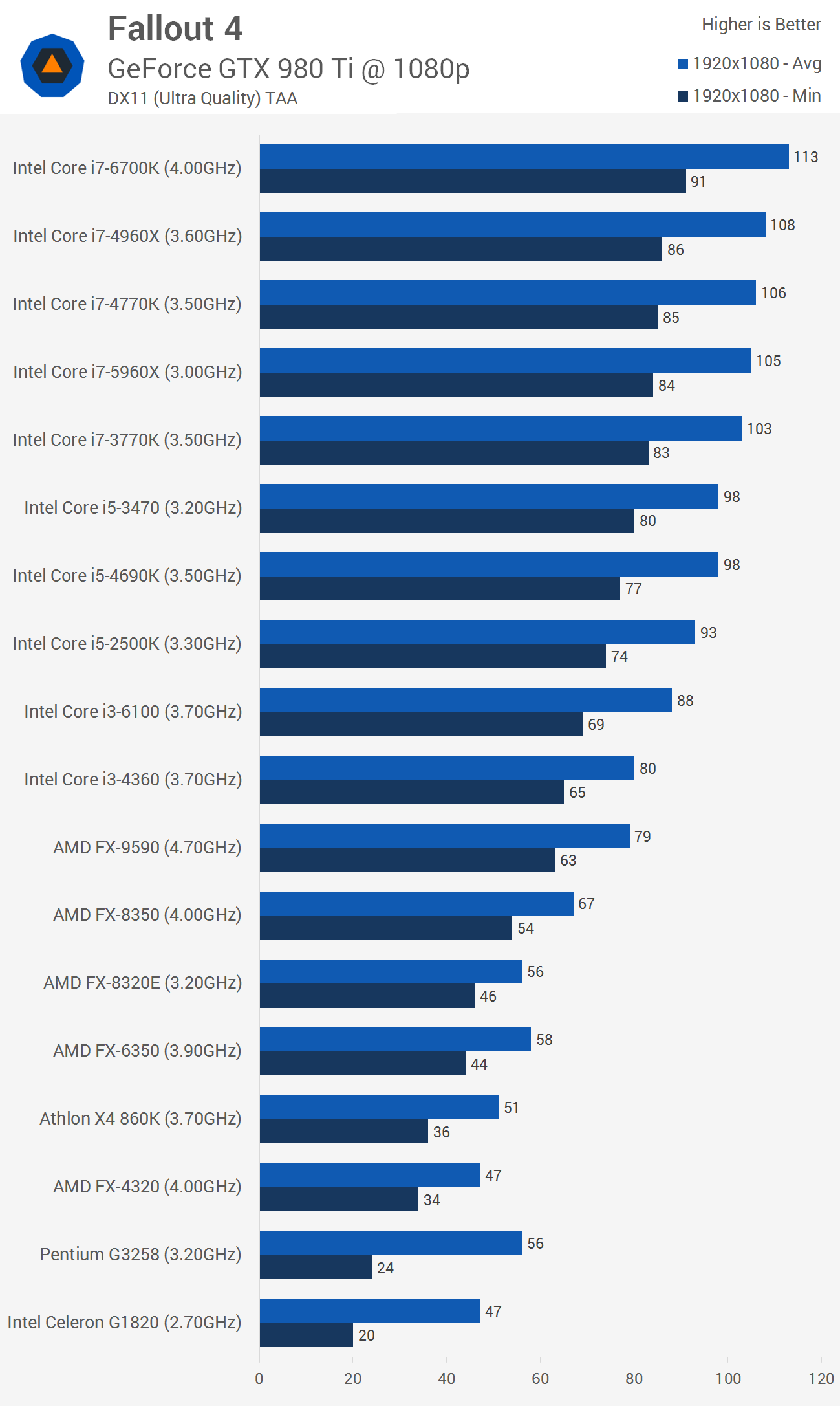

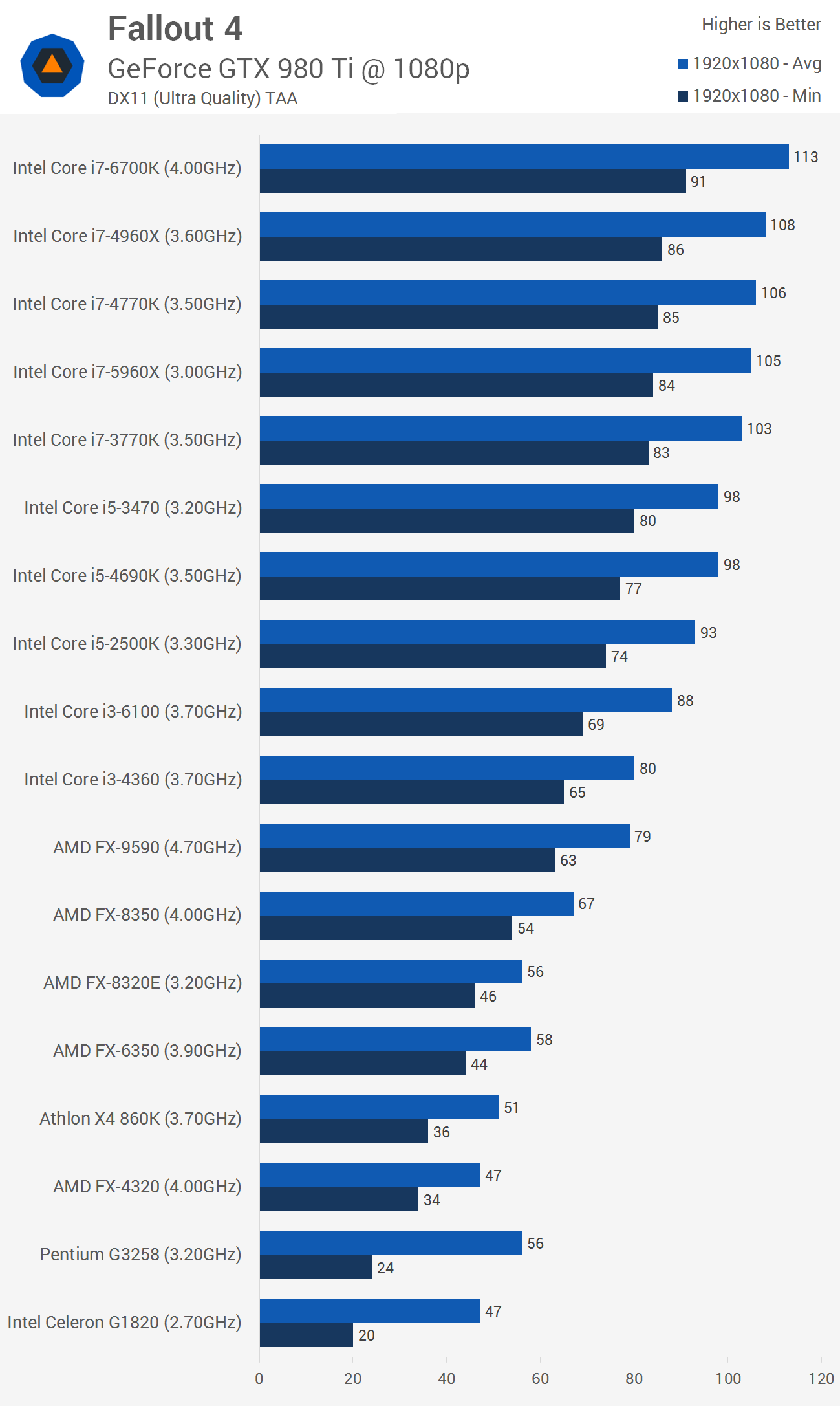

Fallout 4 is an exception and is affected more by clock speed, but you are not going to notice the difference with the CPUs in question. Also, the 5820k is unlocked and can hit 4.0GHz easily. Any decent cooling that you would want for a 5820k is going to handle it @ 4.0GHz.

fudgecakes99

Admirable

gamerk316 :

fudgecakes99 :

turkey3_scratch :

I always recommend the 5820K; I can't recall ever recommending the 6700K at that price. But then people go, "Blah blah blah 6700K better for gaming blah blah" and I say - wait a second - why aren't you getting an i5 for gaming? An i7 is not meant for sheer gaming. For multi-threaded workstation-oriented tasks, or productivity, or recording and video editing, the 5820K is a no-brainer, but people in their foolishness don't get a 6600K for gaming and then talk crap about the 5820K because the 6700K is better.

Just had to let that out

Not entirely. I'd imagine that in certain games, like say Total War, which is pretty heavily CPU intensive, it'd outperform a 6600K, but for the most part I'd be hard pressed to choose a 6700K over a 6600K for pure gaming. That said, I swear you changed your profile pic to a snake a few days ago? Or am I just seeing things?

Here's the difference:

5820k: 3.3GHz

6700k: 4GHz

We've been over this: The extra CPU cores don't matter, so the 700MHz base clock speed advantage wins. Even in tasks that do use those extra threads, unless you get near 80% core loading, the extra clock STILL wins out.

nu uh more cpu cores means mores fasts.

fudgecakes99

Admirable

gamerk316

Glorious

Except that with the extra threads, the 5820k would win out if an application took advantage of them. Look at most of the higher core count Xeons. They don't have very high clock speeds, because they don't need them. The highest clocked Xeon is a quad core @ 3.7GHz. An 18 core Xeon is only clocked @ 2.3GHz. Multithreaded environments benefit more from the extra cache and threads. In most games, clock speed doesn't really matter as much anymore.

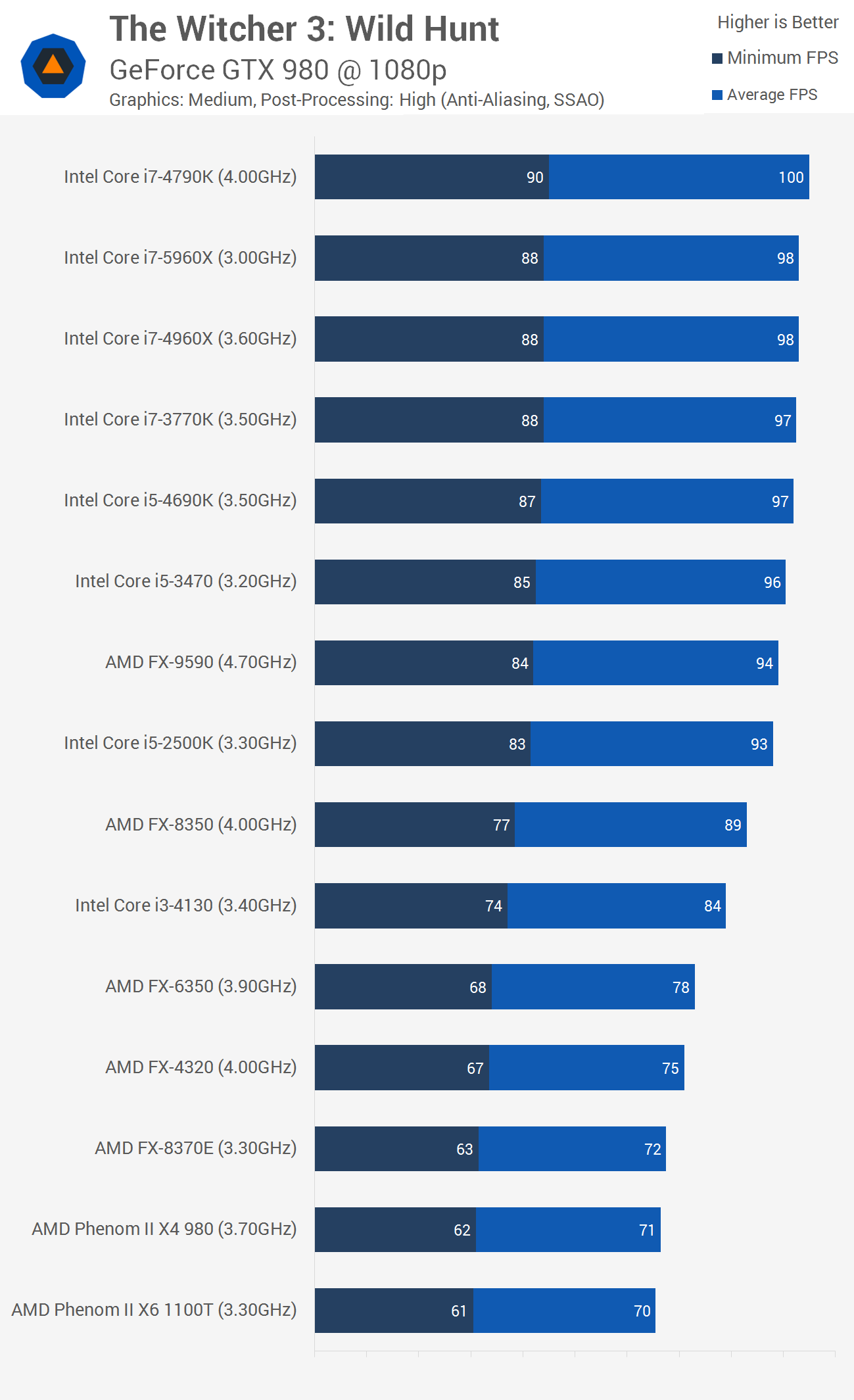

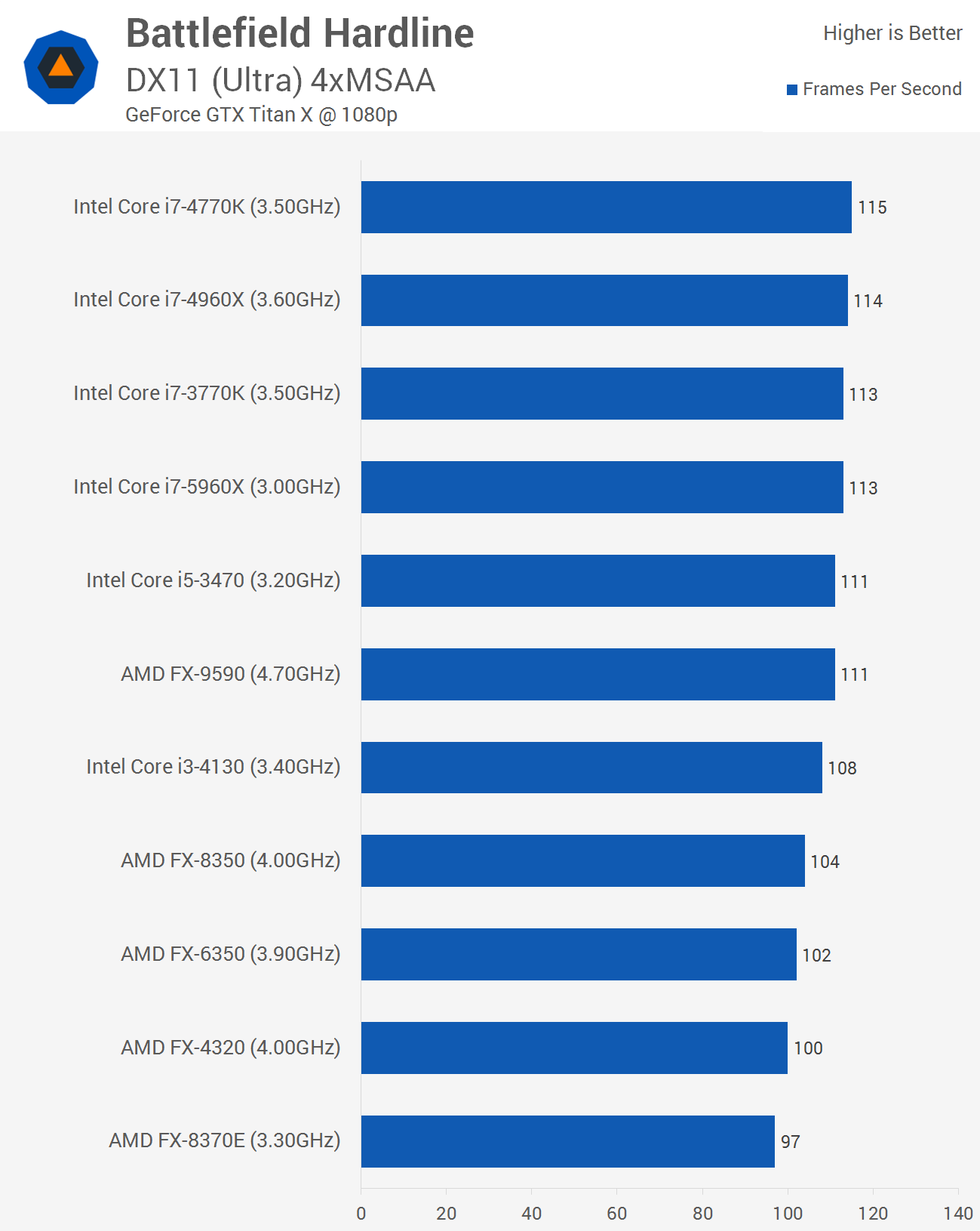

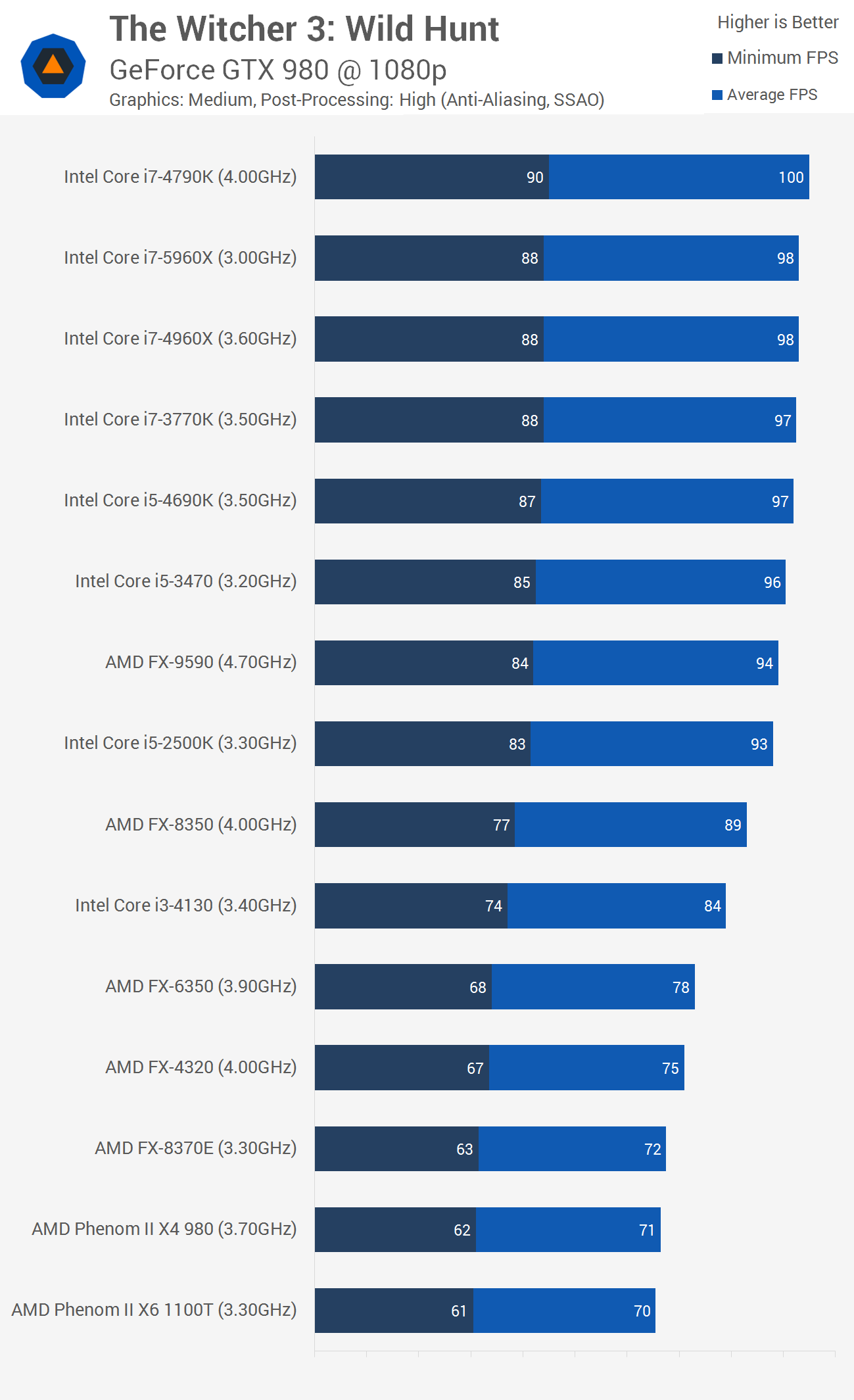

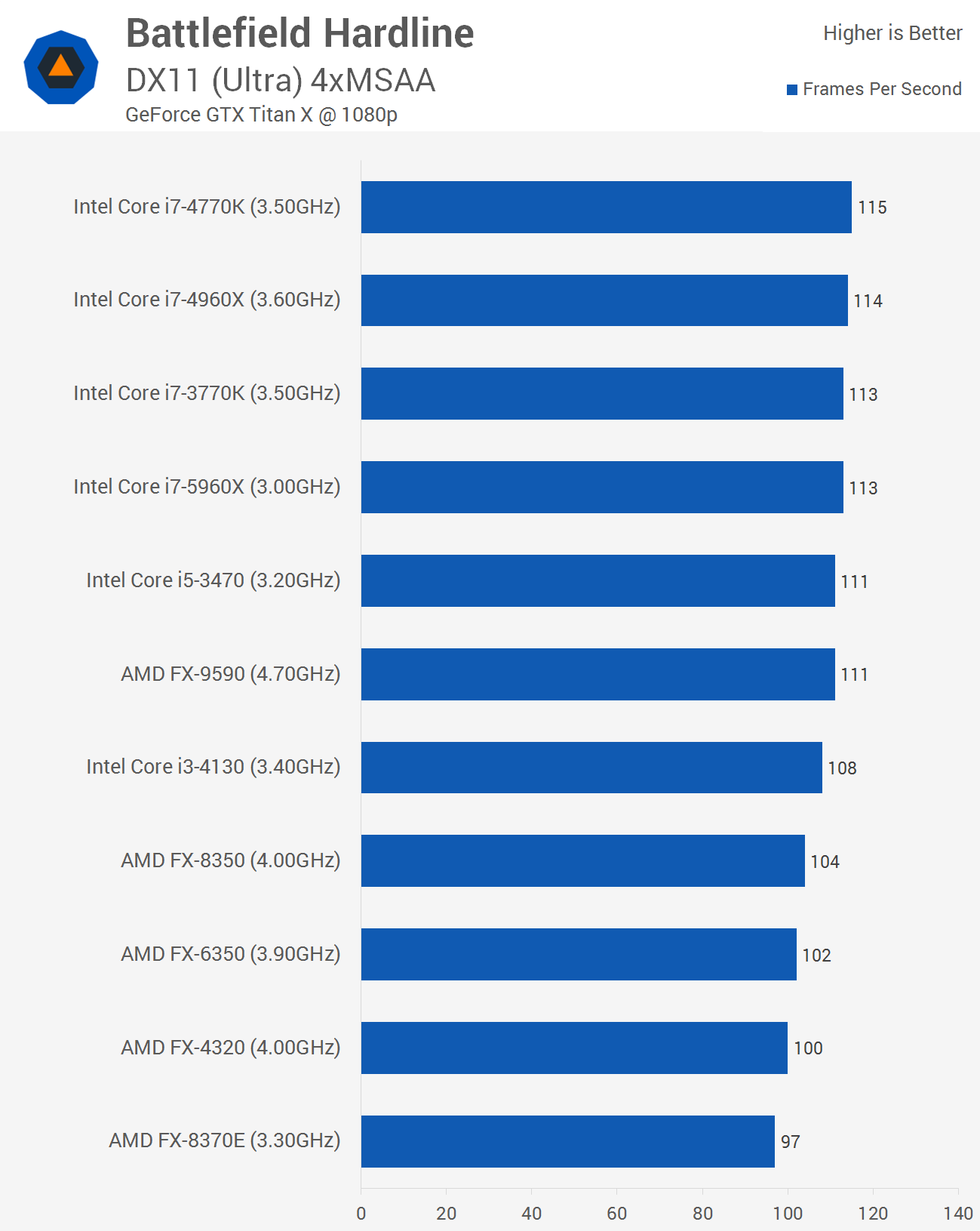

First off, I'm disregarding your gaming benchmarks due to the obvious GPU bottleneck compressing CPU performance at the top. Plop a second GPU in there, and the faster clocked chip would pull ahead by a good margin. CPU benchmarking in games should NEVER be done outside of either low settings or SLI'd configurations, in order to remove the GPU bottleneck compressing top-tier CPU results.

Secondly, there's a difference between adding 12 more cores at the expense of 1000 MHz, and adding two more cores at the expense of 700 MHz. The second is a lot harder to justify even for parallel workloads, as you would expect a 20% performance gain, best case, at full loading across all cores. At < ~75% utilization, the 6700k would beat the 5820k in pretty much any benchmark, assuming no other bottlenecks exist, due to clock speed.
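
As a rough sanity check on the 20% and ~75% figures above, here is a minimal Python sketch. It assumes throughput scales as cores × clock × utilization, with equal per-clock performance on both chips and no Turbo or Hyper-Threading effects, none of which is strictly true. The function names are made up for illustration; the clocks are the base clocks quoted earlier in the thread.

```python
def aggregate_throughput(cores: int, clock_ghz: float, utilization: float = 1.0) -> float:
    """Naive throughput proxy: core-GHz that are actually doing work."""
    return cores * clock_ghz * utilization


def break_even_utilization(many_cores: int, many_clock_ghz: float,
                           few_cores: int, few_clock_ghz: float) -> float:
    """Fraction of the many-core chip that must be busy to match
    the few-core chip running at full load (same naive model)."""
    return (few_cores * few_clock_ghz) / (many_cores * many_clock_ghz)


# Base clocks as quoted in the thread: 5820k = 6 cores @ 3.3GHz, 6700k = 4 cores @ 4.0GHz.
best_case_gain = aggregate_throughput(6, 3.3) / aggregate_throughput(4, 4.0) - 1.0
break_even = break_even_utilization(6, 3.3, 4, 4.0)

print(f"5820k best-case gain over 6700k: {best_case_gain:.1%}")
print(f"5820k utilization needed to break even: {break_even:.1%}")
```

Under those assumptions the six-core chip's best-case gain comes out to roughly 24%, and it needs about 81% of its cores busy to match a fully loaded 6700k, which is in the same ballpark as the figures quoted.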

fudgecakes99

Admirable

A real question though: how big would the drop be going from a 6700k to a 5820k? At least in terms of gaming, I don't think there'd be a large enough fps drop to warrant not getting the 5820k. Right?

fudgecakes99 :

A real question though: how big would the drop be going from a 6700k to a 5820k? At least in terms of gaming, I don't think there'd be a large enough fps drop to warrant not getting the 5820k. Right?

There wouldn't be. Also the 5820k can easily match the clock speed. I have yet to see a k series, since sandy bridge came out, that couldn't do 4.0ghz, often on stock voltage.

gamerk316

Glorious

fudgecakes99 :

A real question though: how big would the drop be going from a 6700k to a 5820k? At least in terms of gaming, I don't think there'd be a large enough fps drop to warrant not getting the 5820k. Right?

I saw some benchmarks a year or two ago comparing the 5820k to a 4770k, and there were a few titles where the 4770k was >5 fps faster. So there are measurable impacts to that clock speed edge.

As a general rule, the 6700k and 5820k would perform the same with a single GPU, as they are both *fast enough* where the GPU is the primary bottleneck, but there will be some cases where the 6700k will be faster. But again, throw in SLI, and the 6700k will be consistently faster.

EDIT

I can't help but note, this is basically a repeat of the age old "E8600 or Q6600" argument from the C2D/C2Q days all over again.

okcnaline

Splendid

gamerk316 :

1,2) First off, I'm disregarding your gaming benchmarks due to the obvious GPU bottleneck compressing CPU performance at the top. Plop a second GPU in there, and the faster clocked chip would pull ahead by a good margin. CPU benchmarking in games should NEVER be done outside of either low settings or SLI'd configurations, in order to remove the GPU bottleneck compressing top-tier CPU results.

4) Secondly, there's a difference between adding 12 more cores at the expense of 1000 MHz, and adding two more cores at the expense of 700 MHz. The second is a lot harder to justify even for parallel workloads, 3) as you would expect a 20% performance gain, best case, at full loading across all cores. At < ~75% utilization, the 6700k would beat the 5820k in pretty much any benchmark, assuming no other bottlenecks exist, due to clock speed.

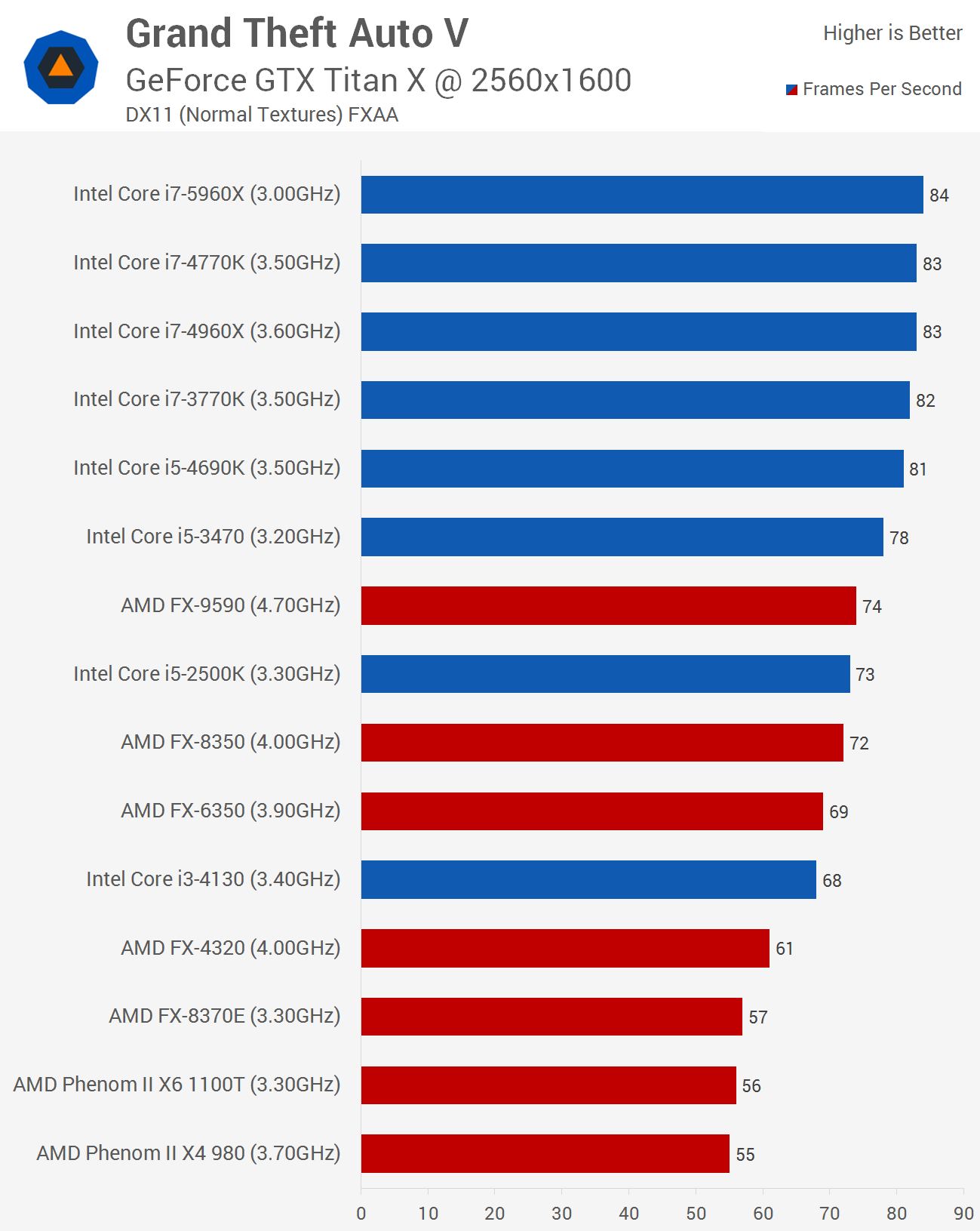

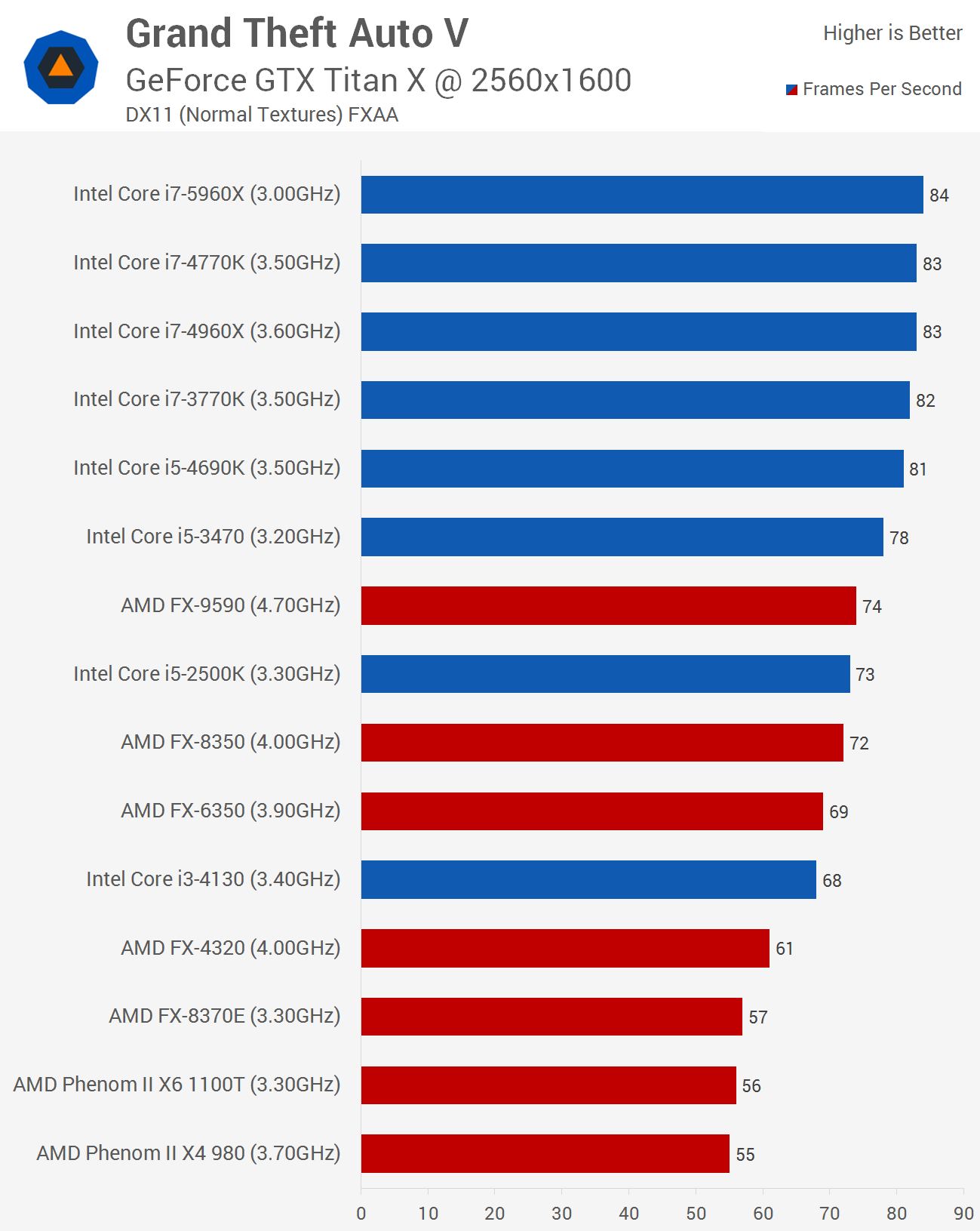

1) GPU bottleneck? GTX 980 and GTX 980 Ti should easily reach >60 FPS in 1080P games. Any games. I see no bottleneck here. Oh, also, how would GPU power help a non-E i7 pull up? That's absurd. If the non-E i7 is already where it is, then that's all there is to it. Or by your statement, that means the i7-5960X would also pull up, right? Won't that ruin your own argument?

2) Do you still have complaints? i7-5960X CLEARLY dominates the scene at 2K and 4K. That's where more cores are utilized. Most of the time, i7-4770K does the job because it's 1080P. Why are you arguing against yourself?

3) That's 20% improvement that would help bring the i7-6700K you dearly support to its knees. i7-5820K is good for 2K and 4K. That's where extra cores are used. That's why I never recommend the i7-6700K for 2K.

4) There's no justifying for slashing 1000Mhz for 10 more cores if it's not worth slashing 200Mhz for 2 cores. Seriously, I don't know how many times I still have to stress about extra cores in this reply.

jimmysmitty

Champion

okcnaline :

gamerk316 :

1,2) First off, I'm disregarding your gaming benchmarks due to the obvious GPU bottleneck compressing CPU performance at the top. Plop a second GPU in there, and the faster clocked chip would pull ahead by a good margin. CPU benchmarking in games should NEVER be done outside of either low settings or SLI'd configurations, in order to remove the GPU bottleneck compressing top-tier CPU results.

4) Secondly, there's a difference between adding 12 more cores at the expense of 1000 MHz, and adding two more cores at the expense of 700 MHz. The second is a lot harder to justify even for parallel workloads, 3) as you would expect a 20% performance gain, best case, at full loading across all cores. At < ~75% utilization, the 6700k would beat the 5820k in pretty much any benchmark, assuming no other bottlenecks exist, due to clock speed.

1) GPU bottleneck? GTX 980 and GTX 980 Ti should easily reach >60 FPS in 1080P games. Any games. I see no bottleneck here. Oh, also, how would GPU power help a non-E i7 pull up? That's absurd. If the non-E i7 is already where it is, then that's all there is to it. Or by your statement, that means the i7-5960X would also pull up, right? Won't that ruin your own argument?

2) Do you still have complaints? i7-5960X CLEARLY dominates the scene at 2K and 4K. That's where more cores are utilized. Most of the time, i7-4770K does the job because it's 1080P. Why are you arguing against yourself?

3) That's 20% improvement that would help bring the i7-6700K you dearly support to its knees. i7-5820K is good for 2K and 4K. That's where extra cores are used. That's why I never recommend the i7-6700K for 2K.

4) There's no justifying for slashing 1000Mhz for 10 more cores if it's not worth slashing 200Mhz for 2 cores. Seriously, I don't know how many times I still have to stress about extra cores in this reply.

1. There are games that struggle to do 60FPS on a 980Ti. I know because I have a 980Ti, currently play at 1080P, and a few games do drop below 60FPS.

Whichever way people go, both platforms serve a purpose. If you want the latest and greatest, then normally the enthusiast line is best, the LGA 115X socket. If you want something that is tried and true and has a few benefits for some setups, then the LGA 2011 platform is better.

Honestly, in the end it comes down to preference. People are literally arguing over what they prefer to have.

gamerk316 :

fudgecakes99 :

A real question though: how big would the drop be going from a 6700k to a 5820k? At least in terms of gaming, I don't think there'd be a large enough fps drop to warrant not getting the 5820k. Right?

I saw some benchmarks a year or two ago comparing the 5820k to a 4770k, and there were a few titles where the 4770k was >5 fps faster. So there are measurable impacts to that clock speed edge.

As a general rule, the 6700k and 5820k would perform the same with a single GPU, as they are both *fast enough* where the GPU is the primary bottleneck, but there will be some cases where the 6700k will be faster. But again, throw in SLI, and the 6700k will be consistently faster.

EDIT

I can't help but note, this is basically a repeat of the age old "E8600 or Q6600" argument from the C2D/C2Q days all over again.

Actually, the X99 platform is also better geared towards multi-card setups. The 5820k has more PCI-E lanes than a 6700k does (28 CPU lanes versus 16).

gamerk316

Glorious

1) GPU bottleneck? GTX 980 and GTX 980 Ti should easily reach >60 FPS in 1080P games. Any games. I see no bottleneck here. Oh, also, how would GPU power help a non-E i7 pull up? That's absurd. If the non-E i7 is already where it is, then that's all there is to it. Or by your statement, that means the i7-5960X would also pull up, right? Won't that ruin your own argument?

Obvious GPU bottleneck. We are talking maximum FPS here, not *able to play north of 60 FPS*, which this configuration can obviously do. In all these cases, you see compression at the top of the results, given the fact all the CPUs are pushing the GPU equally hard. As a result, it's impossible to tell the difference between these CPUs; the scores are too compressed because the GPU can't push frames any faster, regardless of how powerful the CPU you are running with is.
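
The compression effect can be sketched with a toy model in Python: the delivered frame rate is taken as the lower of what the CPU and the GPU can each sustain. This is a simplification, and every number below is hypothetical rather than taken from any benchmark in this thread.

```python
def delivered_fps(cpu_fps_limit: float, gpu_fps_limit: float) -> float:
    """Toy model: frame rate is capped by whichever component is slower."""
    return min(cpu_fps_limit, gpu_fps_limit)


# Hypothetical CPU-side limits, not measured benchmark numbers.
CPU_LIMITS = {"slower CPU": 110.0, "faster CPU": 140.0}


def cpu_gap(gpu_fps_limit: float) -> float:
    """Visible FPS difference between the two hypothetical CPUs."""
    return (delivered_fps(CPU_LIMITS["faster CPU"], gpu_fps_limit)
            - delivered_fps(CPU_LIMITS["slower CPU"], gpu_fps_limit))


# A GPU capped at 90 FPS hides the CPU difference; one capped at 200 FPS exposes it.
for gpu_limit in (90.0, 200.0):
    print(f"GPU limit {gpu_limit:.0f} FPS -> CPU gap {cpu_gap(gpu_limit):.0f} FPS")
```

With the GPU as the limiter, both CPUs land on the same number, which is why low settings or a second GPU are suggested for CPU comparisons.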

2) Do you still have complaints? i7-5960X CLEARLY dominates the scene at 2K and 4K. That's where more cores are utilized. Most of the time, i7-4770K does the job because it's 1080P. Why are you arguing against yourself?

3) That's 20% improvement that would help bring the i7-6700K you dearly support to its knees. i7-5820K is good for 2K and 4K. That's where extra cores are used. That's why I never recommend the i7-6700K for 2K.

Wrong. As far as pushing pixels goes, that is purely the domain of the GPU. It takes just as many CPU threads to drive 1024x768 as it does to drive 4K.

Secondly, I specifically noted you have to fully load the 5820k at all times, otherwise the 6700k remains faster. And outside of benchmarks, this typically doesn't happen.

4) There's no justifying for slashing 1000Mhz for 10 more cores if it's not worth slashing 200Mhz for 2 cores. Seriously, I don't know how many times I still have to stress about extra cores in this reply.

Amdahl's law strikes again:

https://en.wikipedia.org/wiki/Amdahl%27s_law

It's quite clear someone hasn't been hanging around here for my half decade of "I told you so" rants in regards to the limits of adding more cores. Based on your argument, the FX-8150 should still be the most powerful consumer grade CPU on the planet. We've been over this time and time again now: Games do not scale, and never will, for reasons I have been trying to explain for 5 years now. And I'm reasonably sure the mods will frown on me going into yet another tangent on that topic.
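
For anyone who wants to play with the numbers, here is a minimal Python sketch of Amdahl's law applied to the two chips being argued about. It assumes identical per-clock performance and simply scales the Amdahl speedup by base clock; `clock_scaled_performance` is a made-up helper for illustration, not a standard metric.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup over one core when only part of the work scales."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def clock_scaled_performance(parallel_fraction: float, cores: int, clock_ghz: float) -> float:
    """Hypothetical score: Amdahl speedup multiplied by clock speed."""
    return clock_ghz * amdahl_speedup(parallel_fraction, cores)


# Base clocks as quoted in the thread: 6700k = 4 cores @ 4.0GHz, 5820k = 6 cores @ 3.3GHz.
for p in (0.5, 0.8, 0.9, 1.0):
    quad = clock_scaled_performance(p, 4, 4.0)
    hexa = clock_scaled_performance(p, 6, 3.3)
    winner = "5820k" if hexa > quad else "6700k"
    print(f"parallel fraction {p:.1f}: 6700k={quad:.2f}, 5820k={hexa:.2f} -> {winner}")
```

In this simplified model the six-core chip only pulls ahead once roughly 82% or more of the work runs in parallel, which is the point about core count not helping workloads that don't scale.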

okcnaline

Splendid

1) Dur... It's not maximum FPS, it's average. You now have nothing to say, since both of us read the graph incorrectly.

2) It doesn't take as many threads to do 768P as 4K. Physics would be much less demanding.

3) Sigh... I don't know if you're bothering to read my reply. I said that where more threads are utilized (e.g.: video editing), more threads are better. In 4K, that apparently happens. That's why the i7-5820K pulls more FPS than i7-6700K at 2K or 4K.

4) No, I don't subscribe to the AMD fanboy logic. More cores doesn't equal more FPS. FX-8150 isn't the most powerful. What I said was, where games benefit from more threads, it's there that you pull more FPS if you had more cores. i5 is king at 1080P because it has enough single-threaded performance and cores. At 2K and 4K, like I said, i7-5820K has enough single-threaded performance and cores to do well there. At 720P, games were satisfied with Core 2 Duo. It's a combination of single-threaded performance and cores, not just cores.
