jimmysmitty

Champion

Vogner16 :

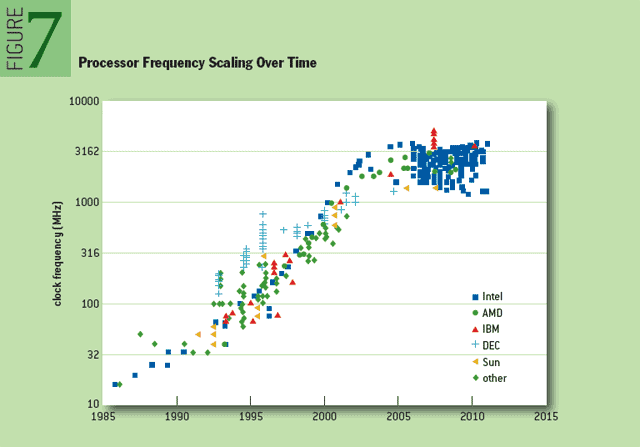

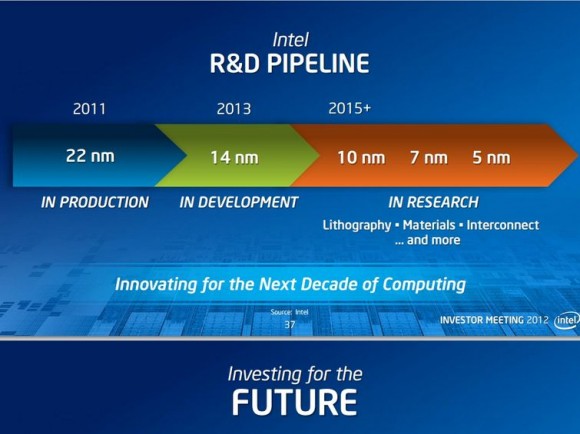

In my opinion, Intel will hit the limit one day. Transistors are very hard to make at smaller dimensions, so they'll turn to crazy designs and different materials for more performance.

I believe that as Intel hits this performance wall (a 2.5% increase in IPC each gen now), we will see Intel not trying to improve its core design for FP or integer performance per se, but moving more along the lines of ASICs for specialist tasks, which take threads off the CPU's workload. Take AMD's APUs, for example. They integrate a UVD to move video decode from the cores to a specialist section of the silicon. That makes video decode faster than what the CPU could do and leaves the CPU open for other compute tasks.

Of course, this needs software to use the specialist ASICs on a CPU die, but Intel has the market by the balls... just snap your fingers!

Security decode ASICs are already here. Video decode ASICs are already here. A compression ASIC? This stuff is what's next, not improvements in per-core performance or more transistors per core! Sure, they will add a few, but you get my point. ASICs are the future of computing.

Intel already had an ASIC for video decode though, Quick Sync, which was pretty damn good compared to a lot of the competition.

But you are probably correct. They have hit a wall, and until that wall can be taken down they will have to focus on ASICs.

I am sure, though, that they will break through the wall some day and keep the performance gains coming. They have to, otherwise we will never get our holodecks from Star Trek.