jimmysmitty

Champion

juanrga :

gamerk316 :

The root problem is simple: assuming the fabs skip 10nm (which some are planning to do), 7nm is likely the last die shrink before physics prevents any further progress.

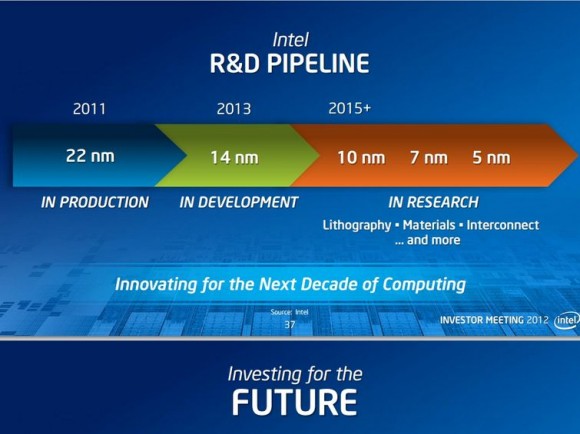

Only GloFo is skipping its "10nm" node. Intel, TSMC, and Samsung are all releasing "10nm" nodes. Also, "7nm" is not the end. The ORTC (Overall Roadmap Technology Characteristics) of the last ITRS (International Technology Roadmap for Semiconductors) goes as far as the "1.8nm" industry label. Intel is already working on "5nm".

I guess one other thing to keep in mind is the interconnect. Omni-Path should become interesting, but consumers might not see it for a while, as we don't quite need it yet.