obsidianaura:

Thanks for the info from all of you.

Looks like I'll stick with the SATA2 ports for the time being.

The reason I'm striping them is not just the performance increase but also that they're only 120GB each (cheaper than buying one big drive) and I need them all in a single partition. I'm not concerned about the three points of failure, TBH. I've got a decent enough backup anyway.

I've been using RAID0 on various breeds of Raptor drives for nearly 10 years and have never run into problems.

Jaquith, when you say the risk of failure is exponential in RAID0, I don't see what would cause that. I thought having 3 drives would just triple the risk?
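A quick way to check the arithmetic, assuming drive failures are independent and every drive has the same failure probability p over the period considered (the 2% figure below is purely hypothetical): a RAID0 array survives only if every member survives, so P(survive) = (1 - p)^n shrinks geometrically with the number of drives n, while P(fail) = 1 - (1 - p)^n stays close to n·p when p is small. The helper name `array_failure_probability` is illustrative only.

```python
def array_failure_probability(p_drive: float, n_drives: int) -> float:
    """Probability that at least one of n independent drives fails.

    RAID0 has no redundancy, so the whole array is lost if any member fails.
    Assumes independent failures and the same per-drive probability p_drive.
    """
    return 1.0 - (1.0 - p_drive) ** n_drives


if __name__ == "__main__":
    p = 0.02  # hypothetical chance of one drive failing within a year
    for n in (1, 2, 3):
        exact = array_failure_probability(p, n)
        linear = n * p  # the "just multiply by the drive count" estimate
        print(f"{n} drive(s): exact {exact:.4f}, n*p {linear:.4f}")
```

With p = 0.02, three drives give about 5.9% against 6% for the straight 3·p estimate, so under these assumptions "roughly triple" is a fair approximation for a small array.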

As for TRIM not working in RAID0, garbage collection should still work, so when the computer is idle it should still do the job. Is that right?

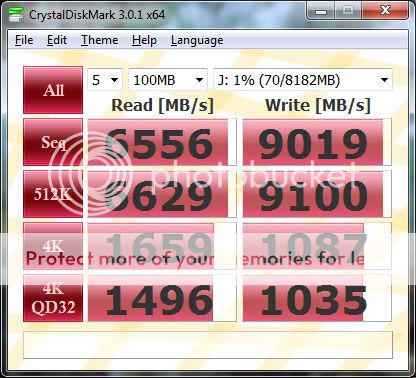

Are people saying there's no performance increase from putting the SSDs in RAID0?

Some time back, I had an Intel X25-M 80GB SSD. I needed more space for my C: drive, so I bought a second one and used RAID0 to get a larger single volume.

It worked well enough, but I could detect NO better performance. Yes, the sequential benchmarks were better. Later I found a better use for those two 80GB drives and replaced them with a single 160GB drive. If anything, it felt a bit faster.

Larger drives have more NAND chips, so the controller can access more of them in parallel: sort of an internal RAID0, if you will.

But the value of an SSD lies in its fast random access, which is what we mostly do, not sequential transfers.
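A toy model of RAID0 striping shows why sequential benchmarks improve while small random reads do not: the array's address space is cut into fixed-size chunks dealt round-robin across the drives, so a small request usually lands on a single drive, while a large one is spread over all of them. The 128 KiB stripe size, the three drives, the offsets, and the `drives_touched` helper are all hypothetical; real controllers let you pick the stripe size, and with many requests in flight small reads can still be served by different drives at once, so this is only part of the picture.

```python
def drives_touched(offset: int, length: int, stripe_size: int, n_drives: int) -> set[int]:
    """Return which member drives a request hits in a simple RAID0 layout.

    Toy model: the array is split into stripe_size chunks and chunk k
    is stored on drive k % n_drives (round-robin).
    """
    first_chunk = offset // stripe_size
    last_chunk = (offset + length - 1) // stripe_size
    if last_chunk - first_chunk + 1 >= n_drives:
        return set(range(n_drives))  # spans a full stripe: every drive is involved
    return {chunk % n_drives for chunk in range(first_chunk, last_chunk + 1)}


if __name__ == "__main__":
    KIB = 1024
    stripe, drives = 128 * KIB, 3  # hypothetical stripe size and drive count
    print(sorted(drives_touched(1_000_000, 4 * KIB, stripe, drives)))  # small random read
    print(sorted(drives_touched(0, 1024 * KIB, stripe, drives)))  # large sequential read
```

Under these assumptions the 4 KiB read touches only drive 1, so it sees the latency of a single SSD, while the 1 MiB read is split across all three drives.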

To solve your single-partition problem, I think you can use software to combine several drives into a single volume.

I have not done this, so I can't comment on any issues.
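One common way such software combines drives is concatenation (a "spanned" or "linear" volume, offered for example by Windows Disk Management and Linux LVM) rather than RAID0 striping. The sketch below is a toy model, not any tool's actual implementation: the hypothetical `locate` helper maps a position in the combined volume to a member drive, filling the first drive before touching the second. Unlike striping, this works with mismatched sizes such as 240GB + 120GB, but it gives no speedup and the volume still depends on every member drive.

```python
from bisect import bisect_right
from itertools import accumulate


def locate(offset_gb: float, drive_sizes_gb: list[float]) -> tuple[int, float]:
    """Map an offset in a concatenated volume to (drive index, offset on that drive).

    Toy model: drives are laid end to end, so the volume fills the first
    drive completely before using the second, and so on.
    """
    ends = list(accumulate(drive_sizes_gb))  # cumulative end offset of each drive
    if not 0 <= offset_gb < ends[-1]:
        raise ValueError("offset lies outside the volume")
    idx = bisect_right(ends, offset_gb)  # first drive whose end is past the offset
    start = ends[idx - 1] if idx > 0 else 0  # where that drive begins in the volume
    return idx, offset_gb - start


if __name__ == "__main__":
    sizes = [240, 120]  # hypothetical 240GB + 120GB drives forming a 360GB volume
    print(locate(100, sizes))  # (0, 100): still on the first drive
    print(locate(300, sizes))  # (1, 60): 60GB into the second drive
```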

Or just buy a single larger SSD. The larger the SSD, the longer it will last (not really an issue), and the better it will handle updates. Regardless, you do not want any SSD to get more than 80% full. Past that point, there is extra overhead in finding free NAND blocks for updates and deletions.
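Applied to sizing, the 80% guideline (a rule of thumb from this thread, not a manufacturer specification) means the data you plan to keep should fit within 80% of the drive. A minimal sketch, where the `smallest_drive_gb` helper, the candidate sizes, and the 300GB of data are all hypothetical:

```python
from typing import Optional


def smallest_drive_gb(data_gb: float, sizes_gb: list[int], max_fill: float = 0.8) -> Optional[int]:
    """Return the smallest drive size (GB) that holds data_gb at or below max_fill full.

    max_fill=0.8 encodes the "don't go past 80% full" rule of thumb.
    Returns None if none of the candidate sizes is large enough.
    """
    for size in sorted(sizes_gb):
        if data_gb <= size * max_fill:
            return size
    return None


if __name__ == "__main__":
    candidates = [120, 240, 500]  # hypothetical drive sizes in GB
    print(smallest_drive_gb(300, candidates))  # 300GB of data -> 500
```

By the same rule, a 240GB + 120GB pair (360GB in total) leaves room for about 288GB, which falls short of 300GB.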

Perhaps it would be better in this case to buy a 240GB SSD and a second 120GB SSD, if you can allocate your files reasonably between them. If you have 300GB of data to store, you may really need a 500GB SSD. Remember that a 120GB SSD will have a maximum usable capacity that is less, perhaps 110GB.
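Much of that gap comes from units: drive makers count a gigabyte as 10^9 bytes, while Windows reports sizes in units of 2^30 bytes (GiB) but still labels them "GB"; file system overhead then takes a little more. A short conversion, using a hypothetical `reported_gib` helper:

```python
GB = 10**9   # drive makers' (decimal) gigabyte
GIB = 2**30  # binary unit Windows uses, though it labels it "GB"


def reported_gib(advertised_gb: float) -> float:
    """Convert an advertised capacity in GB to the binary units Windows displays."""
    return advertised_gb * GB / GIB


if __name__ == "__main__":
    for size in (80, 120, 240):  # drive sizes mentioned in this thread
        print(f"{size}GB advertised -> {reported_gib(size):.1f} shown")
```

For a 120GB drive this gives about 111.8, in line with the "perhaps 110GB" figure.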