The first picture is my UserBenchmark result after setting up RAID0. The second picture is a benchmark from when my two NVMe sticks were not in RAID.

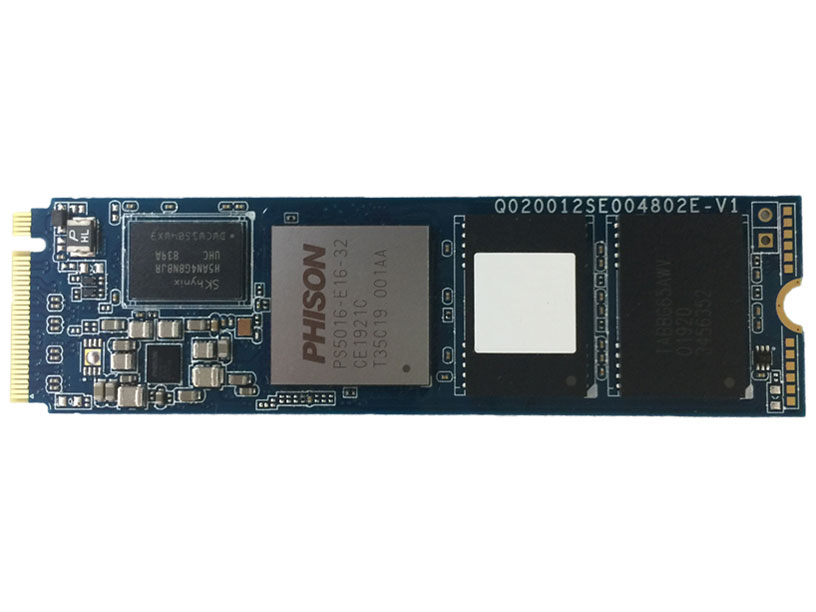

I am using two 1TB Gen4 NVMe sticks, model CSSD-M2B1TPG3VNF. Yes, it's a weird name and not a well-known brand. As far as I know they are sold mostly in Japan, but they are good and reliable at a fair price.

My hope was that going to RAID0 would increase speeds, although I wasn't expecting much. However, looking just at the numbers, it seems it might actually be slower. Would I be correct in assuming this? Yes, sequential speeds are far faster, but the gain is mostly in write speed, and random 4K speeds are quite a bit lower. Since NVMe drives seem to be somewhat "bottlenecked" by their random 4K speeds anyway, this would result in a bit of a decrease in performance.
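My rough understanding of why only the sequential numbers moved: RAID0 cuts the volume into fixed-size stripes and alternates them between the drives, so a small 4K request usually lands inside a single stripe on a single drive, while a large sequential request spans many stripes and keeps both drives busy. Here's a minimal sketch of that mapping. The 128 KiB stripe size is just an assumption for illustration (I don't know what my array actually uses), and the function names are made up. It only shows why 4K reads don't speed up; it doesn't explain why they got slower.

```python
# Hypothetical values for illustration only; the real stripe size depends on the RAID setup.
STRIPE_SIZE = 128 * 1024  # assumed 128 KiB stripe
NUM_DRIVES = 2            # two NVMe sticks in RAID0


def drive_for_offset(offset: int, stripe_size: int = STRIPE_SIZE,
                     num_drives: int = NUM_DRIVES) -> int:
    """Return the index of the drive holding the byte at `offset` in a RAID0 array."""
    # Consecutive stripes alternate between drives: 0, 1, 0, 1, ...
    return (offset // stripe_size) % num_drives


def drives_touched(offset: int, length: int, stripe_size: int = STRIPE_SIZE,
                   num_drives: int = NUM_DRIVES) -> set[int]:
    """Return the set of drives that one request of `length` bytes at `offset` must access."""
    first_stripe = offset // stripe_size
    last_stripe = (offset + length - 1) // stripe_size
    # Each stripe lives on exactly one drive, so collect the drive of every stripe covered.
    return {s % num_drives for s in range(first_stripe, last_stripe + 1)}


if __name__ == "__main__":
    # A 4 KiB random read inside one stripe: only one drive does any work.
    print(drives_touched(offset=300 * 1024, length=4 * 1024))
    # A 1 MiB sequential read spans 8 stripes: both drives work in parallel.
    print(drives_touched(offset=0, length=1024 * 1024))
```

The first call prints `{0}` and the second `{0, 1}`. A 4K request that happens to straddle a stripe boundary would touch both drives, but with stripes this large that is rare.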

My question is: why? Am I missing drivers somehow? I haven't installed any particular NVMe drivers, only the ones needed for RAID capability. I believe the Samsung 970 EVO series has drivers that increase performance quite a bit, and I'm wondering if there is a generic version that applies to other NVMe sticks. If my benchmark results seem off, does anyone have recommendations on how to improve them? I have an MSI X570 Gaming Edge WiFi motherboard, which is supposed to have a "Storage Boost" function, but it is nowhere to be found in the latest Dragon Center application I downloaded.

Anyway, I want to be clear that I'm somewhat OK with a small decrease in overall speed. For me, having my two main drives combined into one adds a lot of convenience: I never have to worry about which drive something is stored or installed on, and I don't have to keep track of separating different types of files across different drives. I'll get a SATA HDD later for pure storage.

What I'm mostly curious about is: does this look like a significant drop in speed? If so, is there anything I could do to improve it? Or do you think I should never ever RAID0 two NVMe drives? Let me hear your thoughts.

Thanks.