aldaia :

juanrga :

-Fran- :

juanrga :

goldstone77 :

juanrga :

aldaia :

Goldstone, that slide you posted must be fake. MisterKnowsAll said:

Unlike GloFo, Intel never promised 10nm for 2015.

The slide he gave clearly says prototypes, not volume production of final chips.

That's what the article is saying: there is no viable 10nm for production.

The article is wrong. 10nm chips are actually in production.

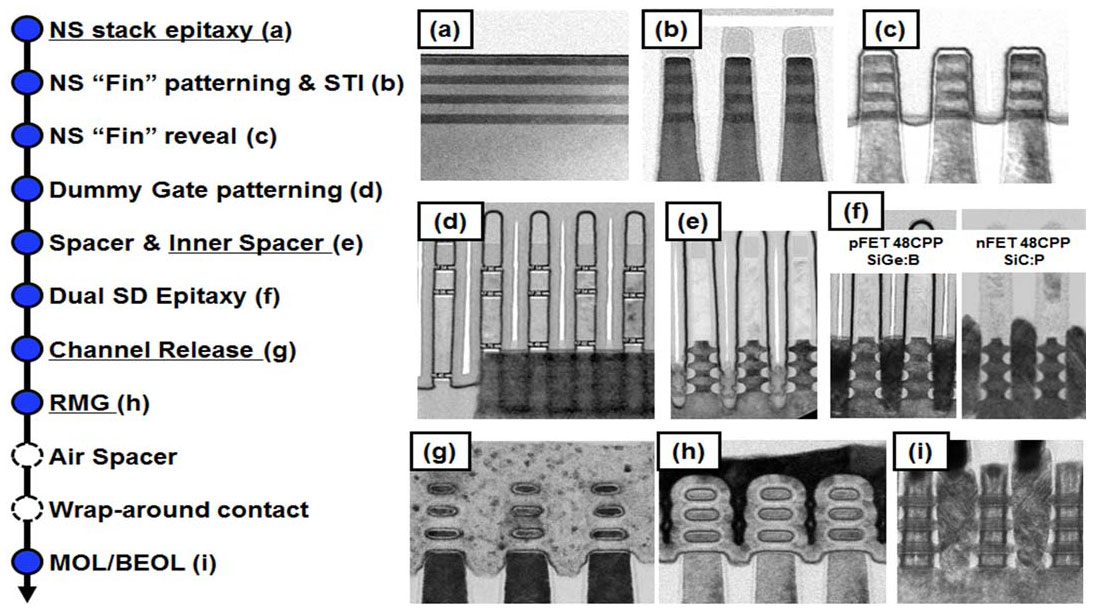

But I was discussing the above slide. That slide is from a talk Intel gave in 2014:

https://www.wesrch.com/electronics/paper-details/pdf-EL1SE1V8OYHLG-intel-custom-foundry-competing-in-today-s-fabless-eco-system#page11

The slide says that prototype silicon will start in 2015, not that volume production in final silicon would start in 2015.

Didn't GloFo have 7nm prototypes in 2015 as well? I vaguely remember an article or news item somewhere stating that.

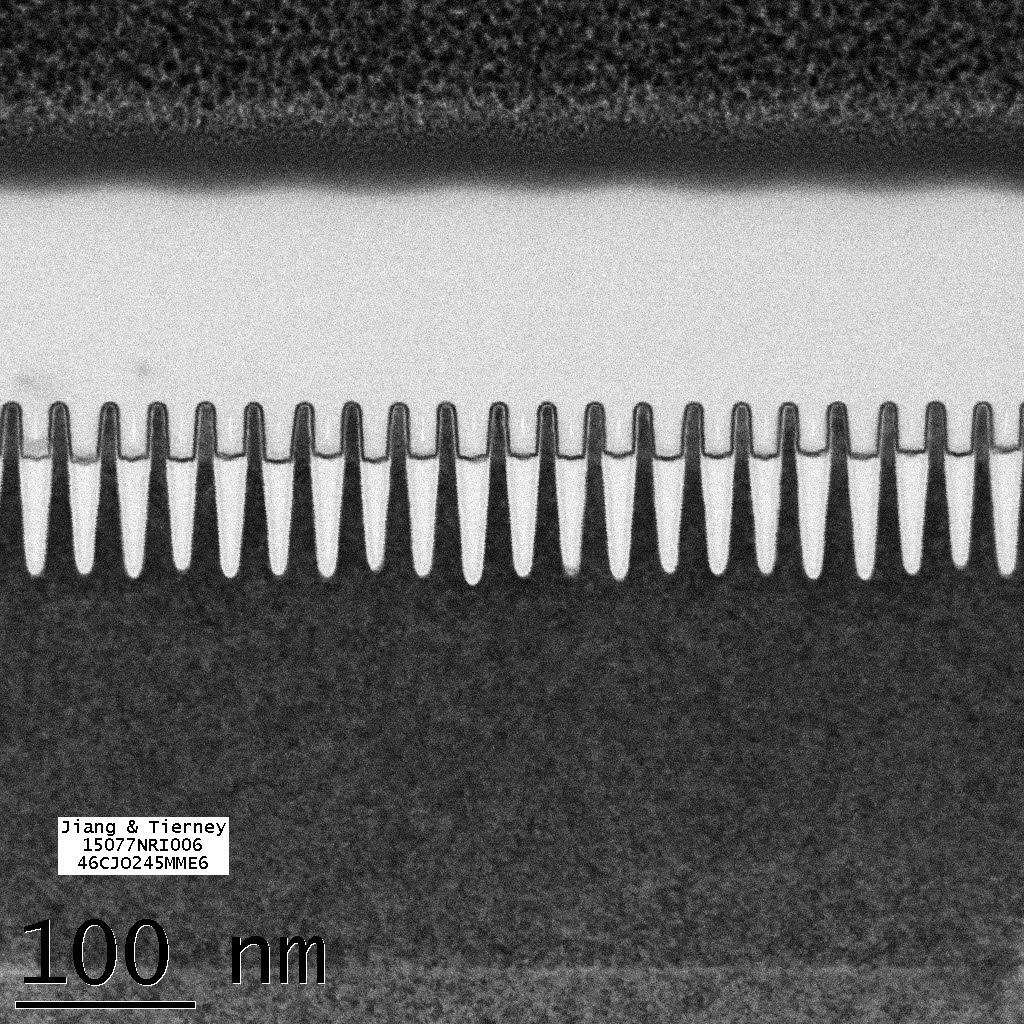

Surely not! I guess you mean that IBM fabricated a 7nm transistor back in 2015.

Single transistor != prototype chip

I'm sure he doesn't mean that.

IBM had already fabricated a 6nm transistor in 2002!!!

https://www.theinquirer.net/inquirer/news/1034321/ibm-claims-worlds-smallest-silicon-transistor

And in fact IBM did produce a 7nm prototype chip in 2015.

Yes, you are right. IBM made a 7nm test chip in 2015. But we are still waiting for IBM to launch a commercial 14nm chip.

-Fran- :

Thanks, yes. I did remember GloFo (still IBM at that time?) having a 7nm prototype.

No, GloFo didn't.

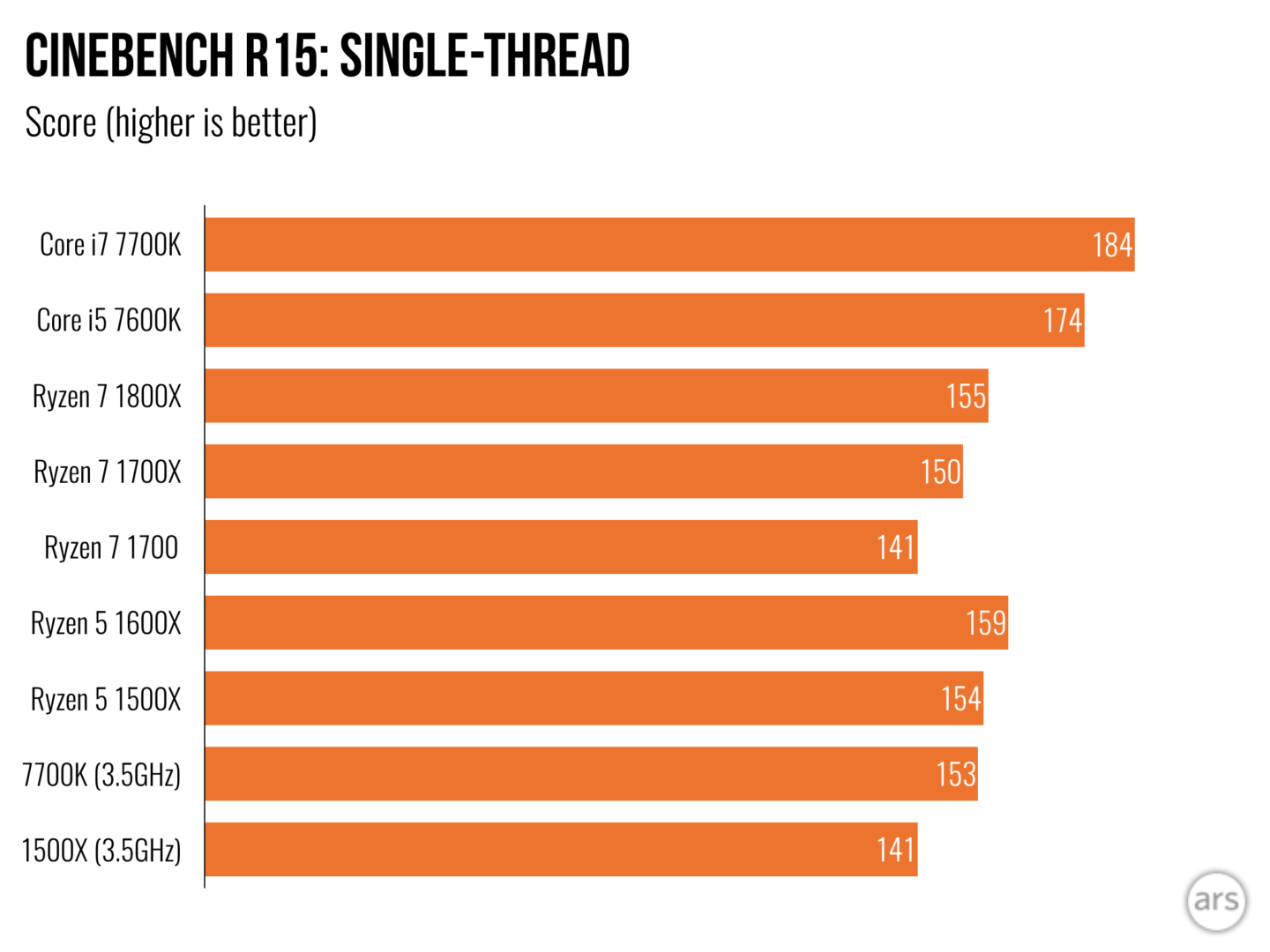

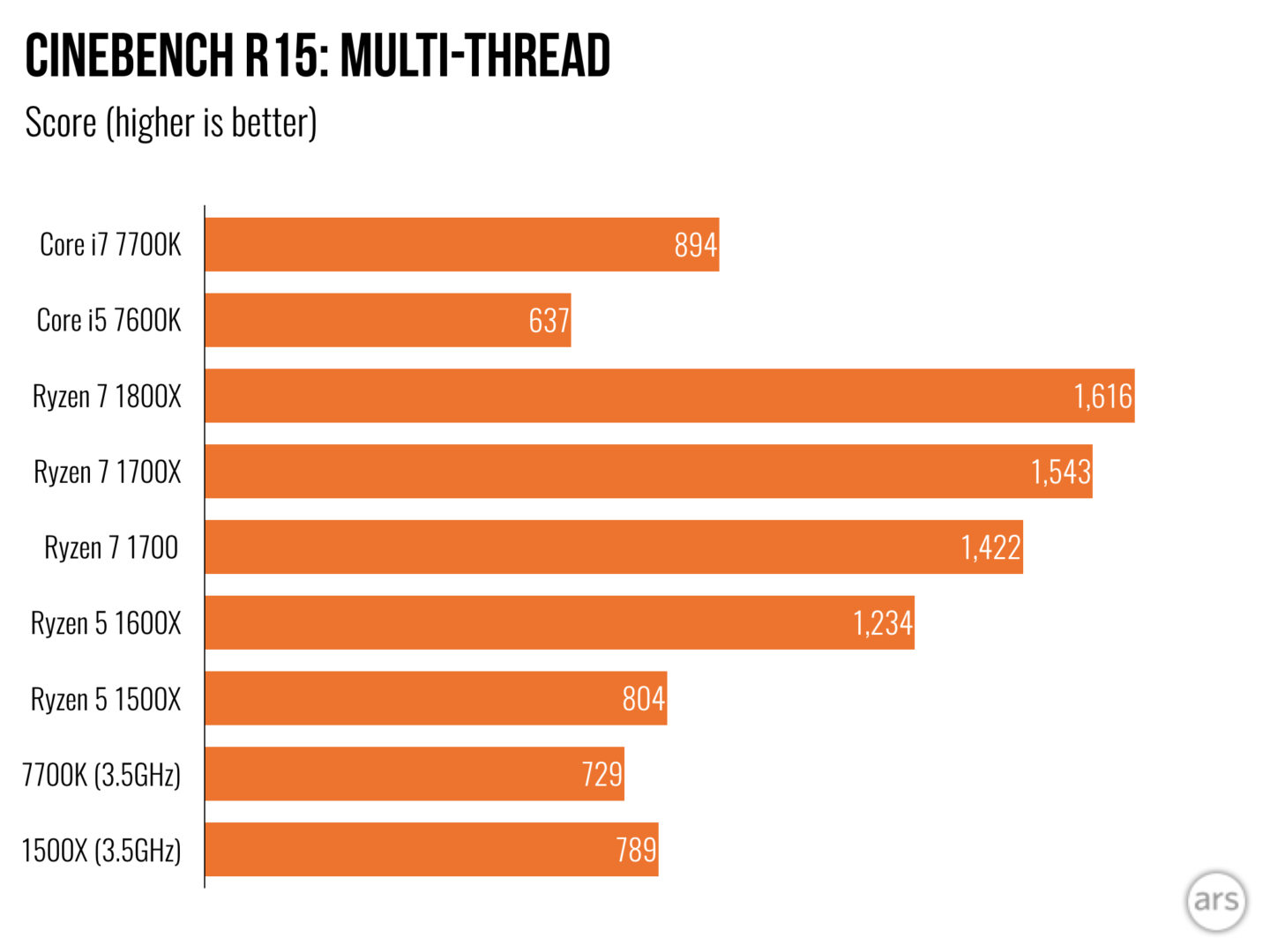

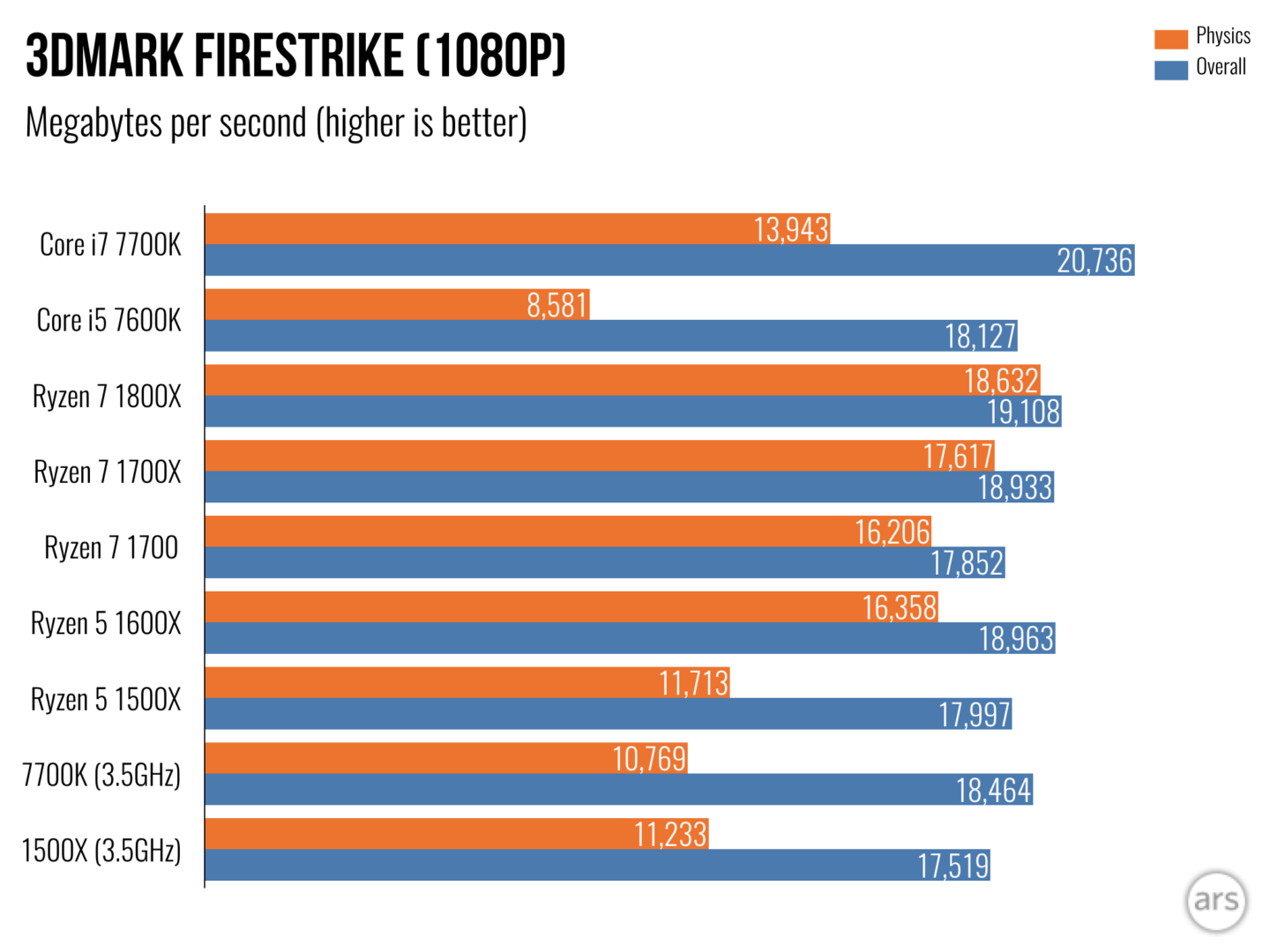

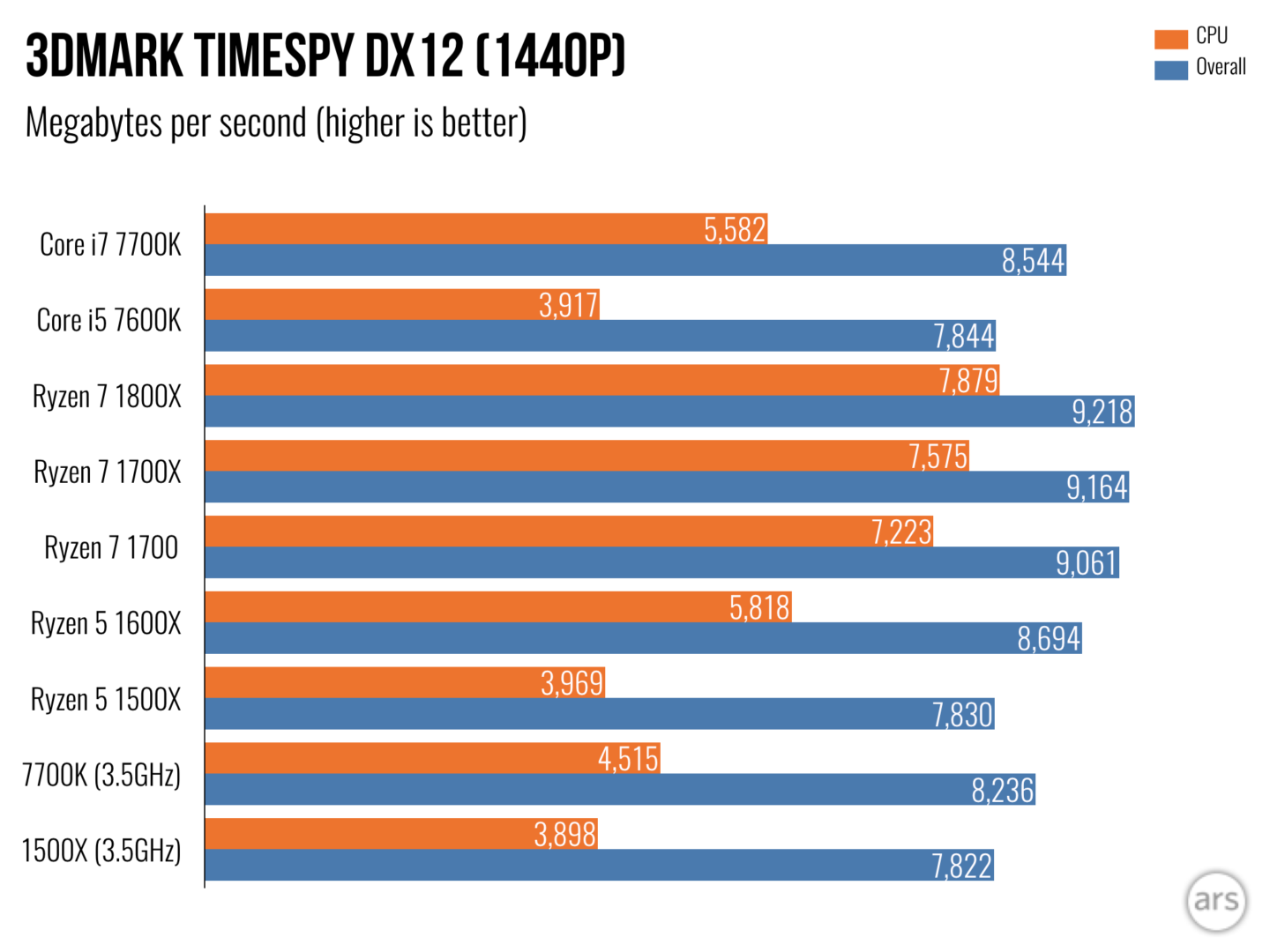

Needs 16 cores and 32 threads for games and streaming? That's the most stupid thing AMD has said in a while. Just because they lack IPC, they are telling their customers to go ahead and buy Threadripper for gaming and streaming... We are not quite there yet; you don't need 32 threads. For most of us Threadripper is unnecessary (though that should change over time). At low resolutions the Intel advantage is very significant, and it trails off ONLY as you run games at higher resolutions and detail settings (because the graphics card becomes the performance bottleneck).